Denoising Autoencoder

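```python
from keras.models import Model
from keras.layers import Dense, Input
from keras.datasets import mnist
import numpy as np

batch_size = 128
nb_epoch = 100                      # number of training epochs
img_rows, img_cols = 28, 28
nb_visible = img_rows * img_cols    # 784 input/output units (one per pixel)
nb_hidden = 500                     # size of the hidden (code) layer
corruption_level = 0.3              # std. dev. of the additive Gaussian noise
```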

Using TensorFlow backend.

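```python
# Load MNIST; the labels are not needed to train an autoencoder
(x_train, _), (x_test, _) = mnist.load_data()

# Scale pixel values from [0, 255] to [0, 1]
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.

# Flatten each 28x28 image into a 784-dimensional vector
x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))
x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))

print(x_train.shape)
print(x_test.shape)
```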

(60000, 784)

(10000, 784)
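The preprocessing cell scales pixels to [0, 1] and flattens each image. As a minimal sketch that runs without downloading MNIST, the same two steps can be checked on a small synthetic `uint8` array standing in for the images; the helper name `preprocess` is hypothetical.

```python
import numpy as np

def preprocess(images):
    """Scale uint8 pixels to [0, 1] and flatten each image to a vector.

    `images` is assumed to have shape (n, rows, cols) with dtype uint8,
    like the arrays returned by mnist.load_data().
    """
    x = images.astype('float32') / 255.
    return x.reshape((len(x), int(np.prod(x.shape[1:]))))

# Synthetic stand-in for MNIST: 5 random 28x28 "images"
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
flat = preprocess(fake_images)
print(flat.shape)  # (5, 784)
```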

```python
# Corrupt the inputs with additive Gaussian noise (std = corruption_level)
x_train_noisy = x_train + corruption_level * np.random.normal(loc=0.0, scale=1.0, size=x_train.shape)
x_test_noisy = x_test + corruption_level * np.random.normal(loc=0.0, scale=1.0, size=x_test.shape)

# Clip back into the valid pixel range [0, 1]
x_train_noisy = np.clip(x_train_noisy, 0., 1.)
x_test_noisy = np.clip(x_test_noisy, 0., 1.)

print(x_train_noisy.shape)
print(x_test_noisy.shape)
```

(60000, 784)

(10000, 784)
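The corruption step adds `corruption_level * N(0, 1)` noise and clips the result. A minimal sketch of the same operation as a reusable function, assuming an explicit NumPy `Generator` for reproducibility (the name `corrupt` and the `rng` argument are illustrative, not part of Keras). Away from the [0, 1] bounds clipping is inactive, so the residual's standard deviation should match the corruption level.

```python
import numpy as np

def corrupt(x, level, rng):
    """Additive Gaussian corruption followed by clipping to [0, 1].

    Same operation as the notebook: x + level * N(0, 1), then clip.
    """
    noisy = x + level * rng.normal(loc=0.0, scale=1.0, size=x.shape)
    return np.clip(noisy, 0., 1.)

rng = np.random.default_rng(42)
x = np.full((1000, 784), 0.5, dtype='float32')  # mid-gray "images"

noisy = corrupt(x, 0.3, rng)
print(noisy.shape, noisy.min() >= 0.0, noisy.max() <= 1.0)

# With a small level and pixels far from the bounds, clipping almost never
# triggers, so the residual's standard deviation should be close to `level`
small = corrupt(x, 0.1, rng)
print(float((small - x).std()))
```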

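```python
# Single-hidden-layer autoencoder: 784 -> 500 -> 784
input_img = Input(shape=(nb_visible,))
# Encoder: ReLU hidden representation
encoded = Dense(nb_hidden, activation='relu')(input_img)
# Decoder: sigmoid keeps reconstructions in [0, 1], matching the pixel range
decoded = Dense(nb_visible, activation='sigmoid')(encoded)

# Keras 2+ expects `inputs`/`outputs` (the `input`/`output` keywords are Keras 1 style)
autoencoder = Model(inputs=input_img, outputs=decoded)
# Note: older standalone Keras defaulted Adadelta to lr=1.0; tf.keras and Keras 3
# default to learning_rate=0.001, so training may be much slower on newer versions
autoencoder.compile(optimizer='adadelta', loss='binary_crossentropy')
autoencoder.summary()
```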

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| input_1 (InputLayer) | (None, 784) | 0 | |
| dense_1 (Dense) | (None, 500) | 392500 | input_1[0][0] |
| dense_2 (Dense) | (None, 784) | 392784 | dense_1[0][0] |

Total params: 785284
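To make the summary concrete, here is a NumPy-only sketch of the same 784-500-784 forward pass. The weights are random (not the trained ones), and the small Gaussian initialization is an arbitrary assumption. Each `Dense` layer computes `activation(x @ W + b)`, so it owns `n_in * n_out` weights plus `n_out` biases, which reproduces the parameter counts in the table.

```python
import numpy as np

nb_visible, nb_hidden = 784, 500

def dense_params(n_in, n_out):
    # A Dense layer has an (n_in, n_out) weight matrix plus n_out biases
    return n_in * n_out + n_out

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
# Random weights for illustration only (initialization scheme is an assumption)
W_enc = rng.normal(scale=0.01, size=(nb_visible, nb_hidden))
b_enc = np.zeros(nb_hidden)
W_dec = rng.normal(scale=0.01, size=(nb_hidden, nb_visible))
b_dec = np.zeros(nb_visible)

def autoencode(x):
    code = relu(x @ W_enc + b_enc)        # encoder: (batch, 784) -> (batch, 500)
    return sigmoid(code @ W_dec + b_dec)  # decoder: (batch, 500) -> (batch, 784)

batch = rng.random((3, nb_visible))
out = autoencode(batch)
print(out.shape)  # (3, 784)

total = dense_params(nb_visible, nb_hidden) + dense_params(nb_hidden, nb_visible)
print(dense_params(nb_visible, nb_hidden), dense_params(nb_hidden, nb_visible), total)
# 392500 392784 785284
```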

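```python
# Train the network to map noisy inputs back to their clean originals.
# Keras 2+ renamed the `nb_epoch` argument to `epochs`.
autoencoder.fit(x_train_noisy, x_train,
                epochs=nb_epoch, batch_size=batch_size, shuffle=True, verbose=1,
                validation_data=(x_test_noisy, x_test))
```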

Train on 60000 samples, validate on 10000 samples

Epoch 1/100

60000/60000 [==============================] - 3s - loss: 0.2751 - val_loss: 0.2242

Epoch 2/100

60000/60000 [==============================] - 3s - loss: 0.2033 - val_loss: 0.1844

Epoch 3/100

60000/60000 [==============================] - 3s - loss: 0.1745 - val_loss: 0.1633

Epoch 4/100

60000/60000 [==============================] - 3s - loss: 0.1572 - val_loss: 0.1490

Epoch 5/100

60000/60000 [==============================] - 2s - loss: 0.1450 - val_loss: 0.1387

Epoch 6/100

60000/60000 [==============================] - 3s - loss: 0.1360 - val_loss: 0.1309

Epoch 7/100

60000/60000 [==============================] - 3s - loss: 0.1292 - val_loss: 0.1249

Epoch 8/100

60000/60000 [==============================] - 3s - loss: 0.1238 - val_loss: 0.1201

Epoch 9/100

60000/60000 [==============================] - 3s - loss: 0.1195 - val_loss: 0.1163

Epoch 10/100

60000/60000 [==============================] - 3s - loss: 0.1160 - val_loss: 0.1131

Epoch 11/100

60000/60000 [==============================] - 2s - loss: 0.1131 - val_loss: 0.1105

Epoch 12/100

60000/60000 [==============================] - 3s - loss: 0.1106 - val_loss: 0.1082

Epoch 13/100

60000/60000 [==============================] - 3s - loss: 0.1085 - val_loss: 0.1063

Epoch 14/100

60000/60000 [==============================] - 3s - loss: 0.1067 - val_loss: 0.1046

Epoch 15/100

60000/60000 [==============================] - 3s - loss: 0.1051 - val_loss: 0.1032

Epoch 16/100

60000/60000 [==============================] - 3s - loss: 0.1037 - val_loss: 0.1019

Epoch 17/100

60000/60000 [==============================] - 3s - loss: 0.1025 - val_loss: 0.1008

Epoch 18/100

60000/60000 [==============================] - 3s - loss: 0.1014 - val_loss: 0.0997

Epoch 19/100

60000/60000 [==============================] - 3s - loss: 0.1003 - val_loss: 0.0988

Epoch 20/100

60000/60000 [==============================] - 3s - loss: 0.0994 - val_loss: 0.0980

Epoch 21/100

60000/60000 [==============================] - 3s - loss: 0.0986 - val_loss: 0.0972

Epoch 22/100

60000/60000 [==============================] - 2s - loss: 0.0979 - val_loss: 0.0965

Epoch 23/100

60000/60000 [==============================] - 2s - loss: 0.0972 - val_loss: 0.0959

Epoch 24/100

60000/60000 [==============================] - 3s - loss: 0.0965 - val_loss: 0.0953

Epoch 25/100

60000/60000 [==============================] - 3s - loss: 0.0959 - val_loss: 0.0948

Epoch 26/100

60000/60000 [==============================] - 3s - loss: 0.0954 - val_loss: 0.0943

Epoch 27/100

60000/60000 [==============================] - 3s - loss: 0.0949 - val_loss: 0.0938

Epoch 28/100

60000/60000 [==============================] - 3s - loss: 0.0944 - val_loss: 0.0934

Epoch 29/100

60000/60000 [==============================] - 3s - loss: 0.0939 - val_loss: 0.0929

Epoch 30/100

60000/60000 [==============================] - 2s - loss: 0.0935 - val_loss: 0.0926

Epoch 31/100

60000/60000 [==============================] - 3s - loss: 0.0931 - val_loss: 0.0922

Epoch 32/100

60000/60000 [==============================] - 2s - loss: 0.0928 - val_loss: 0.0919

Epoch 33/100

60000/60000 [==============================] - 3s - loss: 0.0924 - val_loss: 0.0916

Epoch 34/100

60000/60000 [==============================] - 2s - loss: 0.0921 - val_loss: 0.0913

Epoch 35/100

60000/60000 [==============================] - 3s - loss: 0.0918 - val_loss: 0.0910

Epoch 36/100

60000/60000 [==============================] - 3s - loss: 0.0915 - val_loss: 0.0907

Epoch 37/100

60000/60000 [==============================] - 3s - loss: 0.0912 - val_loss: 0.0904

Epoch 38/100

60000/60000 [==============================] - 3s - loss: 0.0909 - val_loss: 0.0902

Epoch 39/100

60000/60000 [==============================] - 3s - loss: 0.0906 - val_loss: 0.0900

Epoch 40/100

60000/60000 [==============================] - 2s - loss: 0.0904 - val_loss: 0.0897

Epoch 41/100

60000/60000 [==============================] - 3s - loss: 0.0902 - val_loss: 0.0895

Epoch 42/100

60000/60000 [==============================] - 3s - loss: 0.0899 - val_loss: 0.0893

Epoch 43/100

60000/60000 [==============================] - 3s - loss: 0.0897 - val_loss: 0.0891

Epoch 44/100

60000/60000 [==============================] - 3s - loss: 0.0895 - val_loss: 0.0890

Epoch 45/100

60000/60000 [==============================] - 2s - loss: 0.0893 - val_loss: 0.0888

Epoch 46/100

60000/60000 [==============================] - 3s - loss: 0.0891 - val_loss: 0.0886

Epoch 47/100

60000/60000 [==============================] - 3s - loss: 0.0890 - val_loss: 0.0884

Epoch 48/100

60000/60000 [==============================] - 3s - loss: 0.0888 - val_loss: 0.0883

Epoch 49/100

60000/60000 [==============================] - 3s - loss: 0.0886 - val_loss: 0.0881

Epoch 50/100

60000/60000 [==============================] - 3s - loss: 0.0884 - val_loss: 0.0880

Epoch 51/100

60000/60000 [==============================] - 3s - loss: 0.0883 - val_loss: 0.0878

Epoch 52/100

60000/60000 [==============================] - 3s - loss: 0.0881 - val_loss: 0.0877

Epoch 53/100

60000/60000 [==============================] - 2s - loss: 0.0880 - val_loss: 0.0876

Epoch 54/100

60000/60000 [==============================] - 3s - loss: 0.0878 - val_loss: 0.0874

Epoch 55/100

60000/60000 [==============================] - 3s - loss: 0.0877 - val_loss: 0.0873

Epoch 56/100

60000/60000 [==============================] - 2s - loss: 0.0876 - val_loss: 0.0872

Epoch 57/100

60000/60000 [==============================] - 3s - loss: 0.0874 - val_loss: 0.0871

Epoch 58/100

60000/60000 [==============================] - 3s - loss: 0.0873 - val_loss: 0.0870

Epoch 59/100

60000/60000 [==============================] - 3s - loss: 0.0872 - val_loss: 0.0869

Epoch 60/100

60000/60000 [==============================] - 3s - loss: 0.0871 - val_loss: 0.0868

Epoch 61/100

60000/60000 [==============================] - 3s - loss: 0.0870 - val_loss: 0.0867

Epoch 62/100

60000/60000 [==============================] - 3s - loss: 0.0868 - val_loss: 0.0866

Epoch 63/100

60000/60000 [==============================] - 3s - loss: 0.0867 - val_loss: 0.0865

Epoch 64/100

60000/60000 [==============================] - 2s - loss: 0.0866 - val_loss: 0.0864

Epoch 65/100

60000/60000 [==============================] - 3s - loss: 0.0865 - val_loss: 0.0863

Epoch 66/100

60000/60000 [==============================] - 3s - loss: 0.0864 - val_loss: 0.0862

Epoch 67/100

60000/60000 [==============================] - 3s - loss: 0.0863 - val_loss: 0.0861

Epoch 68/100

60000/60000 [==============================] - 3s - loss: 0.0862 - val_loss: 0.0860

Epoch 69/100

60000/60000 [==============================] - 3s - loss: 0.0861 - val_loss: 0.0859

Epoch 70/100

60000/60000 [==============================] - 3s - loss: 0.0860 - val_loss: 0.0859

Epoch 71/100

60000/60000 [==============================] - 3s - loss: 0.0860 - val_loss: 0.0858

Epoch 72/100

60000/60000 [==============================] - 3s - loss: 0.0859 - val_loss: 0.0857

Epoch 73/100

60000/60000 [==============================] - 3s - loss: 0.0858 - val_loss: 0.0857

Epoch 74/100

60000/60000 [==============================] - 3s - loss: 0.0857 - val_loss: 0.0856

Epoch 75/100

60000/60000 [==============================] - 3s - loss: 0.0856 - val_loss: 0.0855

Epoch 76/100

60000/60000 [==============================] - 2s - loss: 0.0855 - val_loss: 0.0854

Epoch 77/100

60000/60000 [==============================] - 2s - loss: 0.0855 - val_loss: 0.0854

Epoch 78/100

60000/60000 [==============================] - 3s - loss: 0.0854 - val_loss: 0.0853

Epoch 79/100

60000/60000 [==============================] - 3s - loss: 0.0853 - val_loss: 0.0852

Epoch 80/100

60000/60000 [==============================] - 2s - loss: 0.0852 - val_loss: 0.0852

Epoch 81/100

60000/60000 [==============================] - 3s - loss: 0.0852 - val_loss: 0.0851

Epoch 82/100

60000/60000 [==============================] - 3s - loss: 0.0851 - val_loss: 0.0850

Epoch 83/100

60000/60000 [==============================] - 3s - loss: 0.0850 - val_loss: 0.0850

Epoch 84/100

60000/60000 [==============================] - 3s - loss: 0.0850 - val_loss: 0.0849

Epoch 85/100

60000/60000 [==============================] - 3s - loss: 0.0849 - val_loss: 0.0849

Epoch 86/100

60000/60000 [==============================] - 3s - loss: 0.0848 - val_loss: 0.0848

Epoch 87/100

60000/60000 [==============================] - 3s - loss: 0.0848 - val_loss: 0.0848

Epoch 88/100

60000/60000 [==============================] - 3s - loss: 0.0847 - val_loss: 0.0847

Epoch 89/100

60000/60000 [==============================] - 3s - loss: 0.0847 - val_loss: 0.0847

Epoch 90/100

60000/60000 [==============================] - 3s - loss: 0.0846 - val_loss: 0.0846

Epoch 91/100

60000/60000 [==============================] - 3s - loss: 0.0846 - val_loss: 0.0846

Epoch 92/100

60000/60000 [==============================] - 3s - loss: 0.0845 - val_loss: 0.0845

Epoch 93/100

60000/60000 [==============================] - 2s - loss: 0.0844 - val_loss: 0.0845

Epoch 94/100

60000/60000 [==============================] - 3s - loss: 0.0844 - val_loss: 0.0844

Epoch 95/100

60000/60000 [==============================] - 3s - loss: 0.0843 - val_loss: 0.0844

Epoch 96/100

60000/60000 [==============================] - 2s - loss: 0.0843 - val_loss: 0.0843

Epoch 97/100

60000/60000 [==============================] - 3s - loss: 0.0842 - val_loss: 0.0843

Epoch 98/100

60000/60000 [==============================] - 3s - loss: 0.0842 - val_loss: 0.0842

Epoch 99/100

60000/60000 [==============================] - 3s - loss: 0.0841 - val_loss: 0.0842

Epoch 100/100

60000/60000 [==============================] - 2s - loss: 0.0841 - val_loss: 0.0842

<keras.callbacks.History at 0x7f905a312ba8>
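The `loss` and `val_loss` values in the log are binary cross-entropy between the reconstruction and the clean image. The sketch below writes out that formula in NumPy, assuming averaging over the 784 pixels per sample and then over the batch, with an epsilon clip to avoid `log(0)`; the value 1e-7 is assumed to match `keras.backend.epsilon()`, and Keras's internal implementation may differ in numerical details. It also shows why the loss plateaus above zero: MNIST pixels are grayscale rather than binary, and for non-binary targets the minimum (reached at `y_pred == y_true`) is the targets' mean binary entropy, which is positive.

```python
import numpy as np

EPS = 1e-7  # assumed to match keras.backend.epsilon()

def binary_crossentropy(y_true, y_pred):
    """Mean BCE per sample (over pixels), then averaged over the batch."""
    p = np.clip(y_pred, EPS, 1.0 - EPS)
    per_pixel = -(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))
    return per_pixel.mean(axis=-1).mean()

# A constant prediction of 0.5 costs log(2) per pixel regardless of the target
y = np.array([[0.0, 1.0, 1.0, 0.0]])
print(round(binary_crossentropy(y, np.full_like(y, 0.5)), 4))  # 0.6931

# For grayscale targets the loss cannot reach 0, even for a perfect reconstruction
gray = np.array([[0.2, 0.5, 0.9]])
best = binary_crossentropy(gray, gray)
worse = binary_crossentropy(gray, np.clip(gray + 0.1, 0, 1))
print(best > 0, best < worse)
```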

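```python
# Mean binary cross-entropy between the reconstructions of the noisy test
# images and the clean test images
evaluation = autoencoder.evaluate(x_test_noisy, x_test, batch_size=batch_size, verbose=1)
print('\nSummary: Loss over the test dataset: %.2f' % evaluation)
```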

9856/10000 [============================>.] - ETA: 0s

Summary: Loss over the test dataset: 0.08
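A single loss value is easier to interpret next to a trivial baseline. One option, which is an addition and not part of the original notebook, is to compare the reconstruction error against the error of simply returning the noisy input unchanged. The sketch uses mean squared error and a hypothetical helper `denoising_report`; in the notebook it could be called as `denoising_report(x_test, x_test_noisy, autoencoder.predict(x_test_noisy))`. The arrays below are synthetic stand-ins, not real model outputs.

```python
import numpy as np

def mse(a, b):
    return float(np.mean((a - b) ** 2))

def denoising_report(clean, noisy, decoded):
    """Return (baseline_mse, model_mse).

    baseline_mse is the error of returning the noisy input unchanged;
    a useful denoiser should achieve model_mse < baseline_mse.
    """
    return mse(noisy, clean), mse(decoded, clean)

# Synthetic stand-ins: the fake "decoded" array is just the clean data plus
# weaker noise, used only to exercise the helper
rng = np.random.default_rng(1)
clean = rng.random((100, 784))
noisy = np.clip(clean + 0.3 * rng.normal(size=clean.shape), 0., 1.)
decoded = np.clip(clean + 0.05 * rng.normal(size=clean.shape), 0., 1.)

baseline, model = denoising_report(clean, noisy, decoded)
print('baseline MSE: %.4f, model MSE: %.4f' % (baseline, model))
```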

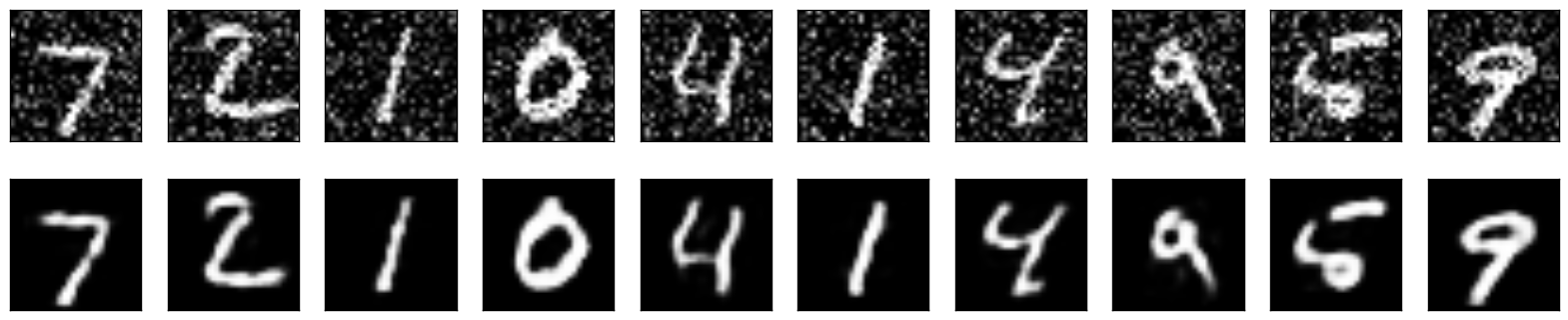

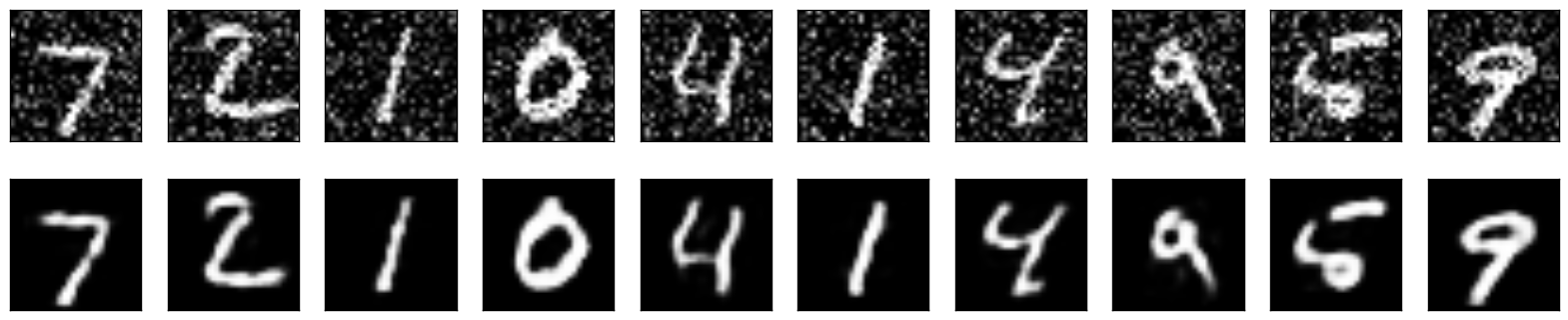

```python
import matplotlib.pyplot as plt
%matplotlib inline

# Reconstruct (denoise) the noisy test images
decoded_imgs = autoencoder.predict(x_test_noisy)

n = 10  # number of digits to display
plt.figure(figsize=(20, 4))
for i in range(n):
    # Top row: noisy input
    ax = plt.subplot(2, n, i + 1)
    plt.imshow(x_test_noisy[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)

    # Bottom row: denoised reconstruction
    ax = plt.subplot(2, n, i + 1 + n)
    plt.imshow(decoded_imgs[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
plt.show()
```
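The plotting loop draws 2 × n separate subplots. A different technique, sketched here under the assumption that all images share the 28x28 shape, is to tile the images into one array and call `imshow` once. `make_montage` is a hypothetical helper, and the display call is left as a comment so the array logic can be checked without a display.

```python
import numpy as np

def make_montage(rows, img_shape=(28, 28)):
    """Tile equally sized batches of flat images into one 2-D array.

    rows: list of arrays, each of shape (n, h*w); output row r shows the
    n images of rows[r] side by side.
    """
    h, w = img_shape
    tiled_rows = []
    for batch in rows:
        imgs = batch.reshape(-1, h, w)                   # (n, h, w)
        tiled_rows.append(np.concatenate(imgs, axis=1))  # (h, n*w)
    return np.concatenate(tiled_rows, axis=0)            # (len(rows)*h, n*w)

rng = np.random.default_rng(0)
noisy_demo = rng.random((10, 784))    # stand-in for x_test_noisy[:10]
decoded_demo = rng.random((10, 784))  # stand-in for decoded_imgs[:10]
montage = make_montage([noisy_demo, decoded_demo])
print(montage.shape)  # (56, 280)

# In the notebook it could then be shown with:
# plt.figure(figsize=(20, 4)); plt.imshow(montage, cmap='gray'); plt.axis('off'); plt.show()
```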