Convolutional Neural Network Example

Build a convolutional neural network with TensorFlow.

This example uses the TensorFlow layers API. See the 'convolutional_network_raw' example for a raw TensorFlow implementation with variables.

- Author: Aymeric Damien

- Project: https://github.com/aymericdamien/TensorFlow-Examples/

CNN Overview

MNIST Dataset Overview

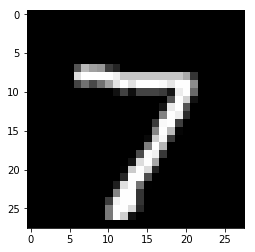

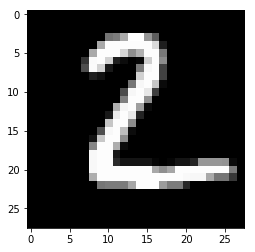

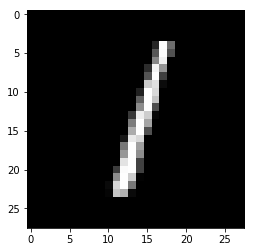

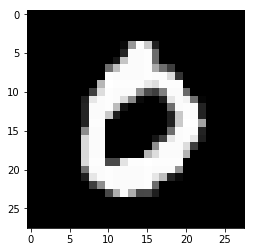

This example uses the MNIST database of handwritten digits. The dataset contains 60,000 examples for training and 10,000 examples for testing. The digits have been size-normalized and centered in a fixed-size image (28x28 pixels) with pixel values from 0 to 1. For simplicity, each image has been flattened and converted to a 1-D NumPy array of 784 features (28*28).

More info: http://yann.lecun.com/exdb/mnist/
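To make the flattened layout concrete, here is a minimal NumPy sketch. The random array is a hypothetical stand-in for one MNIST image (it is not loaded from the dataset), and the variable names are illustrative only.

```python
import numpy as np

# Hypothetical stand-in for one MNIST image: 28x28 pixels with values in [0, 1).
rng = np.random.default_rng(0)
image_2d = rng.random((28, 28)).astype(np.float32)

# Flatten to the 1-D, 784-feature layout described above (row-major order).
flat = image_2d.reshape(-1)

# Recover the 2-D picture, e.g. for plotting or before a convolution.
restored = flat.reshape(28, 28)

print(flat.shape, restored.shape)
```

Because the reshape is lossless, the network can later turn each 784-feature vector back into a 28x28 picture.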

from __future__ import division, print_function, absolute_import

# Import MNIST data

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("/tmp/data/", one_hot=False)

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

Extracting /tmp/data/train-images-idx3-ubyte.gz

Extracting /tmp/data/train-labels-idx1-ubyte.gz

Extracting /tmp/data/t10k-images-idx3-ubyte.gz

Extracting /tmp/data/t10k-labels-idx1-ubyte.gz

# Training Parameters

learning_rate = 0.001

num_steps = 2000

batch_size = 128

# Network Parameters

num_input = 784 # MNIST data input (img shape: 28*28)

num_classes = 10 # MNIST total classes (0-9 digits)

dropout = 0.25 # Dropout, probability to drop a unit
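As a rough illustration of what a drop probability of 0.25 means, the sketch below implements inverted dropout in NumPy. It is an assumption-laden stand-in, not TensorFlow's implementation: the function name `dropout_sketch` and the toy activations are hypothetical. It mirrors the documented behaviour of scaling kept units by 1 / (1 - rate) during training and passing inputs through unchanged otherwise.

```python
import numpy as np

def dropout_sketch(x, rate, training, rng):
    """Illustrative inverted dropout (hypothetical helper, not the TF op)."""
    if not training or rate == 0.0:
        # At evaluation/prediction time the input passes through unchanged.
        return x
    # Keep each unit with probability (1 - rate).
    keep_mask = rng.random(x.shape) >= rate
    # Scale kept units so the expected activation stays the same.
    return np.where(keep_mask, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
activations = np.ones((1000, 64))  # toy activations, all equal to 1

train_out = dropout_sketch(activations, rate=0.25, training=True, rng=rng)
eval_out = dropout_sketch(activations, rate=0.25, training=False, rng=rng)

print("fraction dropped:", (train_out == 0).mean())
print("mean activation (train):", train_out.mean())
```

With this scaling, the mean activation during training stays close to its evaluation-time value, which is why no extra rescaling is needed at prediction time.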

# Create the neural network

def conv_net(x_dict, n_classes, dropout, reuse, is_training):
    # Define a scope for reusing the variables
    with tf.variable_scope('ConvNet', reuse=reuse):
        # TF Estimator input is a dict, in case of multiple inputs
        x = x_dict['images']

        # MNIST data input is a 1-D vector of 784 features (28*28 pixels)
        # Reshape to match picture format [Height x Width x Channel]
        # Tensor input becomes 4-D: [Batch Size, Height, Width, Channel]
        x = tf.reshape(x, shape=[-1, 28, 28, 1])

        # Convolution Layer with 32 filters and a kernel size of 5
        conv1 = tf.layers.conv2d(x, 32, 5, activation=tf.nn.relu)
        # Max Pooling (down-sampling) with strides of 2 and kernel size of 2
        conv1 = tf.layers.max_pooling2d(conv1, 2, 2)

        # Convolution Layer with 64 filters and a kernel size of 3
        conv2 = tf.layers.conv2d(conv1, 64, 3, activation=tf.nn.relu)
        # Max Pooling (down-sampling) with strides of 2 and kernel size of 2
        conv2 = tf.layers.max_pooling2d(conv2, 2, 2)

        # Flatten the data to a 1-D vector for the fully connected layer
        # (flatten is in the tf contrib folder for now)
        fc1 = tf.contrib.layers.flatten(conv2)

        # Fully connected layer
        fc1 = tf.layers.dense(fc1, 1024)
        # Apply Dropout (if is_training is False, dropout is not applied)
        fc1 = tf.layers.dropout(fc1, rate=dropout, training=is_training)

        # Output layer, class prediction
        out = tf.layers.dense(fc1, n_classes)

    return out
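The spatial sizes flowing through the network above can be checked with a few lines of arithmetic. This sketch assumes the layers use their default 'valid' padding and a convolution stride of 1, since the calls above pass no padding or stride arguments to the convolutions. The helper names `conv_out` and `pool_out` are hypothetical.

```python
def conv_out(size, kernel, stride=1):
    # Output size of a convolution with 'valid' (no) padding.
    return (size - kernel) // stride + 1

def pool_out(size, pool, stride):
    # Output size of max pooling with 'valid' padding.
    return (size - pool) // stride + 1

size = 28                      # input height/width
size = conv_out(size, 5)       # conv1, kernel 5 -> 24
size = pool_out(size, 2, 2)    # pool1           -> 12
size = conv_out(size, 3)       # conv2, kernel 3 -> 10
size = pool_out(size, 2, 2)    # pool2           -> 5

flat_features = size * size * 64   # 64 filters in conv2 -> 1600
print(size, flat_features)
```

Under these assumptions, the flatten step hands 1,600 features per image to the 1,024-unit fully connected layer.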

# Define the model function (following TF Estimator Template)

def model_fn(features, labels, mode):
    # Build the neural network
    # Because Dropout behaves differently at training and prediction time, we
    # need to create 2 distinct computation graphs that still share the same weights.
    logits_train = conv_net(features, num_classes, dropout, reuse=False, is_training=True)
    logits_test = conv_net(features, num_classes, dropout, reuse=True, is_training=False)

    # Predictions
    pred_classes = tf.argmax(logits_test, axis=1)
    pred_probas = tf.nn.softmax(logits_test)

    # If prediction mode, early return
    if mode == tf.estimator.ModeKeys.PREDICT:
        return tf.estimator.EstimatorSpec(mode, predictions=pred_classes)

    # Define loss and optimizer
    loss_op = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=logits_train, labels=tf.cast(labels, dtype=tf.int32)))
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
    train_op = optimizer.minimize(loss_op, global_step=tf.train.get_global_step())

    # Evaluate the accuracy of the model
    acc_op = tf.metrics.accuracy(labels=labels, predictions=pred_classes)

    # TF Estimators require returning an EstimatorSpec that specifies
    # the different ops for training, evaluating, ...
    estim_specs = tf.estimator.EstimatorSpec(
        mode=mode,
        predictions=pred_classes,
        loss=loss_op,
        train_op=train_op,
        eval_metric_ops={'accuracy': acc_op})

    return estim_specs
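The loss and prediction steps in the model function can be mimicked in NumPy to see what they compute. This is a sketch under stated assumptions, not the TensorFlow ops themselves: the helper `sparse_softmax_cross_entropy` and the toy logits and labels are hypothetical, chosen only to show integer (non one-hot) labels, a mean cross-entropy loss, argmax predictions, and accuracy.

```python
import numpy as np

def sparse_softmax_cross_entropy(logits, labels):
    """Per-example cross-entropy for integer class labels (illustrative)."""
    # Subtract the row maximum for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the true class for each example.
    return -log_probs[np.arange(len(labels)), labels]

# Toy batch: 2 examples, 3 classes (hypothetical values).
logits = np.array([[2.0, 0.5, -1.0],
                   [0.1, 0.2, 3.0]])
labels = np.array([0, 2])

loss = sparse_softmax_cross_entropy(logits, labels).mean()  # like reduce_mean
pred_classes = logits.argmax(axis=1)                        # like tf.argmax
accuracy = (pred_classes == labels).mean()

print("loss:", loss, "predictions:", pred_classes, "accuracy:", accuracy)
```

The "sparse" variant takes class indices directly, which is why the data was loaded with `one_hot=False`.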

# Build the Estimator

model = tf.estimator.Estimator(model_fn)

INFO:tensorflow:Using default config.

WARNING:tensorflow:Using temporary folder as model directory: /tmp/tmpdhd6F4

INFO:tensorflow:Using config: {'_save_checkpoints_secs': 600, '_session_config': None, '_keep_checkpoint_max': 5, '_tf_random_seed': 1, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_save_checkpoints_steps': None, '_model_dir': '/tmp/tmpdhd6F4', '_save_summary_steps': 100}

# Define the input function for training

input_fn = tf.estimator.inputs.numpy_input_fn(
    x={'images': mnist.train.images}, y=mnist.train.labels,
    batch_size=batch_size, num_epochs=None, shuffle=True)
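Conceptually, the training input function above yields shuffled mini-batches indefinitely (`num_epochs=None`), so the number of training steps is what bounds training. The generator below is a rough NumPy approximation of that idea, not how `numpy_input_fn` is implemented. The name `endless_batches` and the toy arrays are hypothetical, and unlike a production input pipeline this sketch may emit a smaller final batch on each pass.

```python
import numpy as np

def endless_batches(images, labels, batch_size, rng):
    """Yield shuffled (images, labels) mini-batches forever (illustrative)."""
    n = len(images)
    while True:                      # no epoch limit, like num_epochs=None
        order = rng.permutation(n)   # reshuffle on every pass over the data
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            yield images[idx], labels[idx]

# Toy data: 10 "images" of 1 feature each, labelled 0..9 (hypothetical).
rng = np.random.default_rng(0)
images = np.arange(10, dtype=np.float32).reshape(10, 1)
labels = np.arange(10)

gen = endless_batches(images, labels, batch_size=4, rng=rng)
first_pass = [next(gen) for _ in range(3)]   # 4 + 4 + 2 examples
print([len(batch_labels) for _, batch_labels in first_pass])
```

A training loop would then call `next(gen)` once per step and stop after a fixed number of steps.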

# Train the Model

model.train(input_fn, steps=num_steps)

INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Saving checkpoints for 1 into /tmp/tmpdhd6F4/model.ckpt.

INFO:tensorflow:loss = 2.39026, step = 1

INFO:tensorflow:global_step/sec: 238.314

INFO:tensorflow:loss = 0.237997, step = 101 (0.421 sec)

INFO:tensorflow:global_step/sec: 255.312

INFO:tensorflow:loss = 0.0954537, step = 201 (0.392 sec)

INFO:tensorflow:global_step/sec: 257.194

INFO:tensorflow:loss = 0.121477, step = 301 (0.389 sec)

INFO:tensorflow:global_step/sec: 255.018

INFO:tensorflow:loss = 0.0539927, step = 401 (0.392 sec)

INFO:tensorflow:global_step/sec: 254.293

INFO:tensorflow:loss = 0.0440369, step = 501 (0.393 sec)

INFO:tensorflow:global_step/sec: 256.501

INFO:tensorflow:loss = 0.0247431, step = 601 (0.390 sec)

INFO:tensorflow:global_step/sec: 252.956

INFO:tensorflow:loss = 0.0738082, step = 701 (0.395 sec)

INFO:tensorflow:global_step/sec: 253.222

INFO:tensorflow:loss = 0.134998, step = 801 (0.395 sec)

INFO:tensorflow:global_step/sec: 255.606

INFO:tensorflow:loss = 0.00438448, step = 901 (0.391 sec)

INFO:tensorflow:global_step/sec: 256.306

INFO:tensorflow:loss = 0.0471991, step = 1001 (0.390 sec)

INFO:tensorflow:global_step/sec: 255.352

INFO:tensorflow:loss = 0.0371172, step = 1101 (0.392 sec)

INFO:tensorflow:global_step/sec: 253.277

INFO:tensorflow:loss = 0.0129522, step = 1201 (0.395 sec)

INFO:tensorflow:global_step/sec: 252.49

INFO:tensorflow:loss = 0.039862, step = 1301 (0.396 sec)

INFO:tensorflow:global_step/sec: 253.902

INFO:tensorflow:loss = 0.0520571, step = 1401 (0.394 sec)

INFO:tensorflow:global_step/sec: 255.572

INFO:tensorflow:loss = 0.0307549, step = 1501 (0.392 sec)

INFO:tensorflow:global_step/sec: 254.32

INFO:tensorflow:loss = 0.0108862, step = 1601 (0.393 sec)

INFO:tensorflow:global_step/sec: 255.62

INFO:tensorflow:loss = 0.0294434, step = 1701 (0.391 sec)

INFO:tensorflow:global_step/sec: 254.349

INFO:tensorflow:loss = 0.0179781, step = 1801 (0.393 sec)

INFO:tensorflow:global_step/sec: 255.508

INFO:tensorflow:loss = 0.0375271, step = 1901 (0.391 sec)

INFO:tensorflow:Saving checkpoints for 2000 into /tmp/tmpdhd6F4/model.ckpt.

INFO:tensorflow:Loss for final step: 0.00440777.

<tensorflow.python.estimator.estimator.Estimator at 0x7fb80ca55c90>

# Evaluate the Model

# Define the input function for evaluating

input_fn = tf.estimator.inputs.numpy_input_fn(
    x={'images': mnist.test.images}, y=mnist.test.labels,
    batch_size=batch_size, shuffle=False)

# Use the Estimator 'evaluate' method

model.evaluate(input_fn)

INFO:tensorflow:Starting evaluation at 2017-08-21-14:25:29

INFO:tensorflow:Restoring parameters from /tmp/tmpdhd6F4/model.ckpt-2000

INFO:tensorflow:Finished evaluation at 2017-08-21-14:25:29

INFO:tensorflow:Saving dict for global step 2000: accuracy = 0.9908, global_step = 2000, loss = 0.0382241

{'accuracy': 0.99080002, 'global_step': 2000, 'loss': 0.038224086}

# Predict single images

n_images = 4

# Get images from test set

test_images = mnist.test.images[:n_images]

# Prepare the input data

input_fn = tf.estimator.inputs.numpy_input_fn(
    x={'images': test_images}, shuffle=False)

# Use the model to predict the image classes
preds = list(model.predict(input_fn))

# Display
for i in range(n_images):
    plt.imshow(np.reshape(test_images[i], [28, 28]), cmap='gray')
    plt.show()
    print("Model prediction:", preds[i])

INFO:tensorflow:Restoring parameters from /tmp/tmpdhd6F4/model.ckpt-2000

Model prediction: 7

Model prediction: 2

Model prediction: 1

Model prediction: 0