10.variational_autoencoder

Program notes

Date: November 17, 2016

Description: this program builds a variational autoencoder (VAE).

Reference: "Auto-Encoding Variational Bayes" (Kingma and Welling, 2013)

Dataset: MNIST

1. Load the Keras modules

```python
import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline

from keras.layers import Input, Dense, Lambda
from keras.models import Model
from keras import backend as K
from keras import objectives
from keras.datasets import mnist
```

Using TensorFlow backend.

2. Initialize variables, then build and train the model

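```python
batch_size = 100          # samples per gradient step
original_dim = 784        # 28 x 28 MNIST images, flattened
latent_dim = 2            # 2-D latent space, so it can be plotted directly
intermediate_dim = 256    # width of the hidden layer in the encoder and decoder
nb_epoch = 50             # number of training epochs (Keras 1.x argument name)
epsilon_std = 1.0         # std of the noise used in the reparameterization trick
```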

```python
# encoder: x -> hidden layer -> mean and log-variance of q(z|x)
x = Input(batch_shape=(batch_size, original_dim))
h = Dense(intermediate_dim, activation='relu')(x)
z_mean = Dense(latent_dim)(h)
z_log_var = Dense(latent_dim)(h)
```
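`x` is declared with a fixed `batch_shape`, and the sampling step also draws noise with a fixed `(batch_size, latent_dim)` shape. As a result, every batch the model sees must contain exactly `batch_size` rows, and a final partial batch would not match those shapes. The arithmetic sketch below uses the MNIST split sizes (60,000 training and 10,000 test images). It checks that 100 divides both sizes evenly. The helper `batches_per_epoch` is hypothetical, introduced only for illustration.

```python
def batches_per_epoch(n_samples, batch_size):
    """Return (full batches, leftover samples) for one pass over the data."""
    return divmod(n_samples, batch_size)

batch_size = 100
for name, n_samples in [("train", 60000), ("test", 10000)]:
    full, leftover = batches_per_epoch(n_samples, batch_size)
    # a non-zero leftover would mean a final partial batch whose size
    # differs from the fixed batch dimension of the Input layer
    print(f"{name}: {full} batches of {batch_size}, {leftover} left over")
```

With 600 updates per epoch, the 50 training epochs amount to 30,000 gradient steps.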

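```python
def sampling(args):
    # reparameterization trick: z = mean + std * epsilon, with std = exp(log_var / 2)
    z_mean, z_log_var = args
    # `std` is the Keras 1.x keyword; Keras 2 renamed it to `stddev`
    epsilon = K.random_normal(shape=(batch_size, latent_dim), mean=0.,
                              std=epsilon_std)
    return z_mean + K.exp(z_log_var / 2) * epsilon

# note that "output_shape" isn't necessary with the TensorFlow backend
z = Lambda(sampling, output_shape=(latent_dim,))([z_mean, z_log_var])
```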
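`sampling` implements the reparameterization trick. Rather than drawing z directly from N(z_mean, exp(z_log_var)), it draws epsilon from a fixed N(0, 1), then shifts and scales it. This keeps the randomness outside the parameters the network learns. The sketch below is a NumPy version, independent of Keras, and the values of `mu` and `log_var` are arbitrary illustrative choices. It checks that the transformed samples have the intended mean and standard deviation.

```python
import numpy as np

def reparameterize(mu, log_var, n_samples, rng):
    """Draw n_samples of z ~ N(mu, exp(log_var)) as mu + exp(log_var / 2) * eps."""
    # eps ~ N(0, 1) carries all the randomness; mu and log_var enter
    # deterministically, which is what lets gradients flow through them
    eps = rng.standard_normal((n_samples,) + np.shape(mu))
    return mu + np.exp(log_var / 2) * eps

rng = np.random.default_rng(0)
mu = np.array([1.5, -0.5])                # illustrative means
log_var = np.log(np.array([1.0, 4.0]))    # variances 1 and 4, i.e. std 1 and 2

z = reparameterize(mu, log_var, 200_000, rng)
print(z.mean(axis=0))  # close to [1.5, -0.5]
print(z.std(axis=0))   # close to [1.0, 2.0]
```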

```python
# decoder: we instantiate these layers separately so as to reuse them later
# in the stand-alone generator model
decoder_h = Dense(intermediate_dim, activation='relu')
decoder_mean = Dense(original_dim, activation='sigmoid')
h_decoded = decoder_h(z)
x_decoded_mean = decoder_mean(h_decoded)
```
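To get a sense of model size, you can count each `Dense` layer's trainable parameters (weights plus biases) by hand. The arithmetic sketch below assumes the layer widths defined above and counts the shared decoder layers once. The helper `dense_params` is hypothetical, introduced only for illustration.

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

original_dim, intermediate_dim, latent_dim = 784, 256, 2

layers = {
    "encoder hidden": dense_params(original_dim, intermediate_dim),
    "z_mean": dense_params(intermediate_dim, latent_dim),
    "z_log_var": dense_params(intermediate_dim, latent_dim),
    "decoder_h": dense_params(latent_dim, intermediate_dim),
    "decoder_mean": dense_params(intermediate_dim, original_dim),
}
for name, count in layers.items():
    print(f"{name:>15}: {count}")
print("total:", sum(layers.values()))
```

The `Lambda` sampling layer has no weights. The two 784-wide layers account for almost all of the roughly 404k parameters, while the 2-D bottleneck itself adds very few.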

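```python
def vae_loss(x, x_decoded_mean):
    # reconstruction term: binary_crossentropy averages over the 784 pixels,
    # so multiplying by original_dim turns it into a per-image sum
    xent_loss = original_dim * objectives.binary_crossentropy(x, x_decoded_mean)
    # closed-form KL divergence from q(z|x) = N(z_mean, exp(z_log_var)) to the prior N(0, I)
    kl_loss = - 0.5 * K.sum(1 + z_log_var - K.square(z_mean) - K.exp(z_log_var), axis=-1)
    return xent_loss + kl_loss

vae = Model(x, x_decoded_mean)
vae.compile(optimizer='rmsprop', loss=vae_loss)
```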
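`vae_loss` is the per-image negative evidence lower bound. It adds a reconstruction term to the KL divergence from q(z|x) = N(z_mean, exp(z_log_var)) to the prior N(0, I). For diagonal Gaussians, that KL divergence has the closed form -0.5 * sum(1 + log_var - mean^2 - exp(log_var)). The sketch below re-implements the loss in NumPy, mirroring the Keras expression rather than calling Keras. It makes two properties easy to check:

- The KL term vanishes when q equals the prior.
- Scaling the mean cross-entropy by `original_dim` equals summing it over pixels.

The stand-in arrays are random, not MNIST data.

```python
import numpy as np

def kl_to_standard_normal(z_mean, z_log_var):
    """KL(N(z_mean, exp(z_log_var)) || N(0, I)) per sample, summed over latent dims."""
    return -0.5 * np.sum(1 + z_log_var - np.square(z_mean) - np.exp(z_log_var), axis=-1)

def binary_crossentropy(x, x_hat, eps=1e-7):
    """Binary cross-entropy averaged over the last (pixel) axis; x_hat is clipped away from 0 and 1."""
    x_hat = np.clip(x_hat, eps, 1 - eps)
    return -np.mean(x * np.log(x_hat) + (1 - x) * np.log(1 - x_hat), axis=-1)

def vae_loss(x, x_hat, z_mean, z_log_var):
    """Per-image negative ELBO: summed reconstruction error plus the KL term."""
    original_dim = x.shape[-1]
    return original_dim * binary_crossentropy(x, x_hat) + kl_to_standard_normal(z_mean, z_log_var)

rng = np.random.default_rng(1)
x = rng.random((3, 784))                   # stand-in "images" with pixels in [0, 1)
x_hat = rng.uniform(0.05, 0.95, (3, 784))  # stand-in reconstructions
z_mean = np.zeros((3, 2))
z_log_var = np.zeros((3, 2))

# with q(z|x) equal to the prior the KL term vanishes and only reconstruction remains
print(vae_loss(x, x_hat, z_mean, z_log_var))
# moving the mean by 1 along one latent axis costs 0.5 nats of KL
print(kl_to_standard_normal(np.array([[1.0, 0.0]]), np.zeros((1, 2))))
```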

```python
# train the VAE on MNIST digits
(x_train, y_train), (x_test, y_test) = mnist.load_data()
# scale pixel values to [0, 1] and flatten each 28x28 image into a 784-vector
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))
x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))
```
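The preprocessing turns each `uint8` image of shape (28, 28) into a `float32` row of 784 values in [0, 1]. That is the range the sigmoid output and the binary cross-entropy expect. The sketch below applies the same steps to a small random stand-in array (not real MNIST data).

```python
import numpy as np

rng = np.random.default_rng(0)
# stand-in for mnist.load_data() output: 5 fake 28x28 grayscale images
images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

x = images.astype('float32') / 255.
x = x.reshape((len(x), np.prod(x.shape[1:])))

print(x.shape, x.dtype)  # (5, 784) float32
```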

```python
# the input is also the target: the VAE learns to reconstruct its input
vae.fit(x_train, x_train,
        shuffle=True,
        nb_epoch=nb_epoch,
        batch_size=batch_size,
        validation_data=(x_test, x_test))

# build a model to project inputs on the latent space
encoder = Model(x, z_mean)
```

Train on 60000 samples, validate on 10000 samples

Epoch 1/50

60000/60000 [==============================] - 5s - loss: 191.0905 - val_loss: 173.5436

Epoch 2/50

60000/60000 [==============================] - 5s - loss: 171.7320 - val_loss: 169.0859

Epoch 3/50

60000/60000 [==============================] - 5s - loss: 168.1224 - val_loss: 166.7636

Epoch 4/50

60000/60000 [==============================] - 5s - loss: 165.5420 - val_loss: 164.4730

Epoch 5/50

60000/60000 [==============================] - 5s - loss: 163.8741 - val_loss: 163.4501

Epoch 6/50

60000/60000 [==============================] - 5s - loss: 162.7379 - val_loss: 162.9273

Epoch 7/50

60000/60000 [==============================] - 5s - loss: 161.8719 - val_loss: 161.7938

Epoch 8/50

60000/60000 [==============================] - 5s - loss: 161.0796 - val_loss: 161.3434

Epoch 9/50

60000/60000 [==============================] - 5s - loss: 160.4377 - val_loss: 160.4363

Epoch 10/50

60000/60000 [==============================] - 5s - loss: 159.8330 - val_loss: 159.9203

Epoch 11/50

60000/60000 [==============================] - 5s - loss: 159.2621 - val_loss: 159.5222

Epoch 12/50

60000/60000 [==============================] - 5s - loss: 158.7406 - val_loss: 158.9181

Epoch 13/50

60000/60000 [==============================] - 5s - loss: 158.2449 - val_loss: 158.6521

Epoch 14/50

60000/60000 [==============================] - 5s - loss: 157.7862 - val_loss: 158.1587

Epoch 15/50

60000/60000 [==============================] - 5s - loss: 157.3053 - val_loss: 157.8226

Epoch 16/50

60000/60000 [==============================] - 5s - loss: 156.9417 - val_loss: 158.0445

Epoch 17/50

60000/60000 [==============================] - 5s - loss: 156.5658 - val_loss: 157.3171

Epoch 18/50

60000/60000 [==============================] - 5s - loss: 156.2249 - val_loss: 157.0271

Epoch 19/50

60000/60000 [==============================] - 5s - loss: 155.8873 - val_loss: 157.0536

Epoch 20/50

60000/60000 [==============================] - 5s - loss: 155.5819 - val_loss: 156.3859

Epoch 21/50

60000/60000 [==============================] - 5s - loss: 155.2945 - val_loss: 156.1629

Epoch 22/50

60000/60000 [==============================] - 5s - loss: 155.0148 - val_loss: 155.9180

Epoch 23/50

60000/60000 [==============================] - 5s - loss: 154.7706 - val_loss: 155.9890

Epoch 24/50

60000/60000 [==============================] - 5s - loss: 154.5395 - val_loss: 155.7588

Epoch 25/50

60000/60000 [==============================] - 5s - loss: 154.3486 - val_loss: 155.7783

Epoch 26/50

60000/60000 [==============================] - 5s - loss: 154.1120 - val_loss: 155.4132

Epoch 27/50

60000/60000 [==============================] - 5s - loss: 153.9194 - val_loss: 155.1289

Epoch 28/50

60000/60000 [==============================] - 5s - loss: 153.6825 - val_loss: 155.4338

Epoch 29/50

60000/60000 [==============================] - 5s - loss: 153.5506 - val_loss: 155.0366

Epoch 30/50

60000/60000 [==============================] - 5s - loss: 153.3196 - val_loss: 154.7996

Epoch 31/50

60000/60000 [==============================] - 5s - loss: 153.1628 - val_loss: 154.6084

Epoch 32/50

60000/60000 [==============================] - 4s - loss: 152.9938 - val_loss: 155.1787

Epoch 33/50

60000/60000 [==============================] - 5s - loss: 152.8034 - val_loss: 154.5734

Epoch 34/50

60000/60000 [==============================] - 5s - loss: 152.6532 - val_loss: 154.3676

Epoch 35/50

60000/60000 [==============================] - 4s - loss: 152.4781 - val_loss: 154.4870

Epoch 36/50

60000/60000 [==============================] - 4s - loss: 152.3254 - val_loss: 154.2274

Epoch 37/50

60000/60000 [==============================] - 4s - loss: 152.1693 - val_loss: 154.5915

Epoch 38/50

60000/60000 [==============================] - 4s - loss: 151.9978 - val_loss: 154.0650

Epoch 39/50

60000/60000 [==============================] - 4s - loss: 151.8608 - val_loss: 153.7670

Epoch 40/50

60000/60000 [==============================] - 4s - loss: 151.7393 - val_loss: 153.9039

Epoch 41/50

60000/60000 [==============================] - 4s - loss: 151.5966 - val_loss: 154.7774

Epoch 42/50

60000/60000 [==============================] - 5s - loss: 151.4757 - val_loss: 153.7938

Epoch 43/50

60000/60000 [==============================] - 5s - loss: 151.3705 - val_loss: 153.8829

Epoch 44/50

60000/60000 [==============================] - 5s - loss: 151.2328 - val_loss: 153.7716

Epoch 45/50

60000/60000 [==============================] - 4s - loss: 151.1133 - val_loss: 153.3561

Epoch 46/50

60000/60000 [==============================] - 4s - loss: 151.0194 - val_loss: 153.4168

Epoch 47/50

60000/60000 [==============================] - 4s - loss: 150.8907 - val_loss: 153.4800

Epoch 48/50

60000/60000 [==============================] - 4s - loss: 150.7891 - val_loss: 153.5535

Epoch 49/50

60000/60000 [==============================] - 4s - loss: 150.6946 - val_loss: 153.8616

Epoch 50/50

60000/60000 [==============================] - 4s - loss: 150.5713 - val_loss: 153.4389

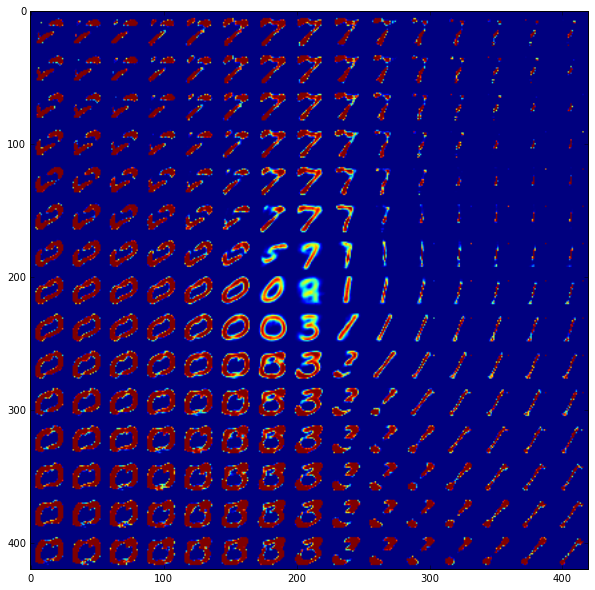
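In the training log, the training loss is still falling at epoch 50 (151.37 at epoch 43, 150.57 at epoch 50). Over the same epochs, the validation loss has hovered between about 153.4 and 153.9. The sketch below parses lines in the format printed above, using only epochs 44 to 50 copied from the log, and reports the epoch with the lowest `val_loss`. In practice you would more typically read these values from the `History` object that `fit` returns than parse printed text.

```python
import re

# epochs 44-50, copied from the training log above
log = """\
Epoch 44/50
60000/60000 [==============================] - 5s - loss: 151.2328 - val_loss: 153.7716
Epoch 45/50
60000/60000 [==============================] - 4s - loss: 151.1133 - val_loss: 153.3561
Epoch 46/50
60000/60000 [==============================] - 4s - loss: 151.0194 - val_loss: 153.4168
Epoch 47/50
60000/60000 [==============================] - 4s - loss: 150.8907 - val_loss: 153.4800
Epoch 48/50
60000/60000 [==============================] - 4s - loss: 150.7891 - val_loss: 153.5535
Epoch 49/50
60000/60000 [==============================] - 4s - loss: 150.6946 - val_loss: 153.8616
Epoch 50/50
60000/60000 [==============================] - 4s - loss: 150.5713 - val_loss: 153.4389
"""

# one match per epoch: (epoch, training loss, validation loss)
pattern = re.compile(r"Epoch (\d+)/\d+\n[^\n]*? - loss: ([\d.]+) - val_loss: ([\d.]+)")
records = [(int(ep), float(tr), float(va)) for ep, tr, va in pattern.findall(log)]

best_epoch, best_train, best_val = min(records, key=lambda r: r[2])
print(f"lowest val_loss {best_val} at epoch {best_epoch} (train loss {best_train})")
```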

```python
# display a 2D plot of the digit classes in the latent space
x_test_encoded = encoder.predict(x_test, batch_size=batch_size)
plt.figure(figsize=(6, 6))
plt.scatter(x_test_encoded[:, 0], x_test_encoded[:, 1], c=y_test)
plt.colorbar()
plt.show()
```

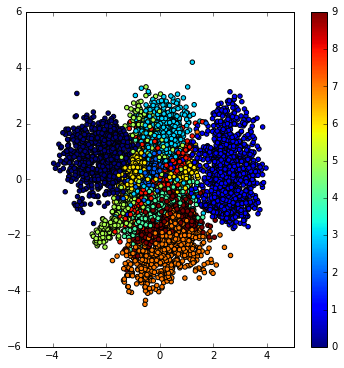
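The scatter plot colours each encoded test image by its digit label. One simple numeric summary is the mean latent position of each class. The sketch below assumes `x_test_encoded` is an (N, 2) array and `y_test` holds the matching labels. Tiny hand-made arrays stand in for both, and the helper `class_centroids` is hypothetical.

```python
import numpy as np

def class_centroids(codes, labels):
    """Return {label: mean latent vector} for each distinct label."""
    return {int(c): codes[labels == c].mean(axis=0) for c in np.unique(labels)}

# hypothetical stand-ins for encoder.predict(x_test) and y_test
codes = np.array([[0.0, 1.0], [0.2, 1.2], [-1.0, -1.0], [-1.2, -0.8]])
labels = np.array([3, 3, 7, 7])

centroids = class_centroids(codes, labels)
for digit, centre in centroids.items():
    print(digit, centre)
```

With the real arrays, this would be `class_centroids(x_test_encoded, y_test)`. Centroids that lie close together suggest digit classes the 2-D code separates poorly.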

```python
from scipy.stats import norm

# build a digit generator that can sample from the learned distribution;
# it reuses the trained decoder layers, so it shares their weights
decoder_input = Input(shape=(latent_dim,))
_h_decoded = decoder_h(decoder_input)
_x_decoded_mean = decoder_mean(_h_decoded)
generator = Model(decoder_input, _x_decoded_mean)

# display a 2D manifold of the digits
n = 15  # figure with 15x15 digits
digit_size = 28
figure = np.zeros((digit_size * n, digit_size * n))
# the prior over z is N(0, 1), so place grid points at its 5%..95% quantiles;
# a uniform grid over [-15, 15] would put most samples far into the tails
grid_x = norm.ppf(np.linspace(0.05, 0.95, n))
grid_y = norm.ppf(np.linspace(0.05, 0.95, n))

for i, yi in enumerate(grid_y):
    for j, xi in enumerate(grid_x):
        z_sample = np.array([[xi, yi]])
        x_decoded = generator.predict(z_sample)
        digit = x_decoded[0].reshape(digit_size, digit_size)
        figure[i * digit_size: (i + 1) * digit_size,
               j * digit_size: (j + 1) * digit_size] = digit

plt.figure(figsize=(10, 10))
plt.imshow(figure, cmap='Greys_r')
plt.show()
```
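The prior over z is N(0, 1) in each dimension. A natural grid for the manifold plot therefore places points at evenly spaced quantiles of that prior, rather than evenly over a fixed interval. Python's standard-library `statistics.NormalDist` (Python 3.8+) provides the inverse CDF, the same function as `scipy.stats.norm.ppf`. The sketch below computes the 15 grid coordinates for quantiles 0.05 to 0.95. The helper `quantile_grid` is hypothetical.

```python
from statistics import NormalDist

def quantile_grid(n, lo=0.05, hi=0.95):
    """n latent coordinates at evenly spaced quantiles of N(0, 1)."""
    step = (hi - lo) / (n - 1)
    return [NormalDist().inv_cdf(lo + k * step) for k in range(n)]

grid = quantile_grid(15)
print([round(g, 3) for g in grid])
```

All 15 points lie within about ±1.645, where the prior puts 90% of its mass in each dimension. A uniform grid over [-15, 15] would place most samples far into the tails.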