"""Simple tutorial for using TensorFlow to compute a linear regression.

Parag K. Mital, Jan. 2016"""


%matplotlib inline

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

plt.ion()

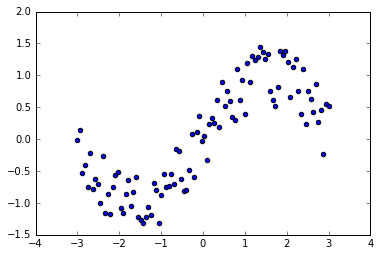

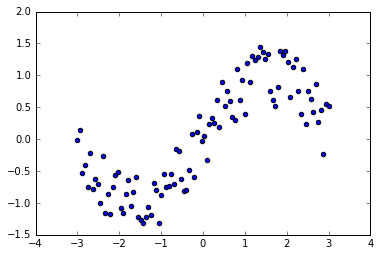

n_observations = 100

fig, ax = plt.subplots(1, 1)

# Toy data: evenly spaced x values on [-3, 3], y = sin(x) plus uniform noise in [-0.5, 0.5].
xs = np.linspace(-3, 3, n_observations)

ys = np.sin(xs) + np.random.uniform(-0.5, 0.5, n_observations)

ax.scatter(xs, ys)

fig.show()

plt.draw()


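# Placeholders for the input x and the target y; their values are
# supplied through feed_dict when the graph is run.
X = tf.placeholder(tf.float32)

Y = tf.placeholder(tf.float32)

# Model parameters, initialized from a standard normal distribution.
W = tf.Variable(tf.random_normal([1]), name='weight')

b = tf.Variable(tf.random_normal([1]), name='bias')

# Linear model: Y_pred = X * W + b.
Y_pred = tf.add(tf.multiply(X, W), b)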

cost = tf.reduce_sum(tf.pow(Y_pred - Y, 2)) / (n_observations - 1)
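Written out, the cost above and the gradients that gradient descent follows are shown below, with $n$ = `n_observations` = 100 and $(x_i, y_i)$ the pairs in `xs`, `ys`. Setting both gradients to zero gives the closed-form least-squares solution; the $1/(n-1)$ factor scales the cost but does not move its minimum.

$$
C(W, b) = \frac{1}{n-1} \sum_{i=1}^{n} \left( W x_i + b - y_i \right)^2
$$

$$
\frac{\partial C}{\partial W} = \frac{2}{n-1} \sum_{i=1}^{n} \left( W x_i + b - y_i \right) x_i,
\qquad
\frac{\partial C}{\partial b} = \frac{2}{n-1} \sum_{i=1}^{n} \left( W x_i + b - y_i \right)
$$

$$
W^{*} = \frac{\sum_{i} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i} (x_i - \bar{x})^2},
\qquad
b^{*} = \bar{y} - W^{*} \bar{x}
$$

Note that the training loop feeds one `(x, y)` pair at a time, so during those updates the sum has a single term while the divisor stays $n - 1 = 99$; each per-sample step is therefore 99 times smaller than it would be without the divisor.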

learning_rate = 0.01

optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)
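`GradientDescentOptimizer(learning_rate).minimize(cost)` returns an op that, each time it is run, moves every trainable variable (here `W` and `b`) a step of size `learning_rate` against the gradient of `cost`. The sketch below reproduces one such update by hand for a single `(x, y)` pair, assuming plain Python floats in place of tensors; `gradient_descent_step` is a hypothetical helper, not a TensorFlow function.

```python
def gradient_descent_step(w, b, x, y, learning_rate=0.01, n_observations=100):
    """One plain gradient-descent update for a single (x, y) observation.

    Uses the per-sample cost (w*x + b - y)**2 / (n_observations - 1), i.e. the
    graph's cost when X and Y are fed one scalar pair.
    """
    residual = w * x + b - y
    scale = 2.0 / (n_observations - 1)
    grad_w = scale * residual * x  # dC/dw
    grad_b = scale * residual      # dC/db
    # Step against the gradient, scaled by the learning rate.
    return w - learning_rate * grad_w, b - learning_rate * grad_b


# Usage: starting from w = b = 0, take one step toward the point (x, y) = (1, 1).
w, b = gradient_descent_step(0.0, 0.0, x=1.0, y=1.0)
print(w, b)  # w and b both become about 2.02e-4
```

Running `sess.run(optimizer, feed_dict={X: x, Y: y})` once per observation, as the training loop does, amounts to applying this update repeatedly while sweeping through the data.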

n_epochs = 1000

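with tf.Session() as sess:
    # Initialize W and b with their random_normal starting values.
    sess.run(tf.global_variables_initializer())

    prev_training_cost = 0.0
    for epoch_i in range(n_epochs):
        # One gradient-descent step per (x, y) observation.
        for (x, y) in zip(xs, ys):
            sess.run(optimizer, feed_dict={X: x, Y: y})

        # Evaluate the cost on the full data set after each epoch.
        training_cost = sess.run(
            cost, feed_dict={X: xs, Y: ys})
        print(training_cost)

        # Every 20 epochs, draw the current fit; later fits are drawn darker.
        # float() avoids integer division (alpha = 0) under Python 2.
        if epoch_i % 20 == 0:
            ax.plot(xs, Y_pred.eval(
                feed_dict={X: xs}, session=sess),
                'k', alpha=epoch_i / float(n_epochs))
            fig.show()
            plt.draw()

        # Stop early once the cost has effectively stopped changing.
        if np.abs(prev_training_cost - training_cost) < 0.000001:
            break
        prev_training_cost = training_cost

fig.show()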

1.43989

1.30686

1.18916

1.08503

0.992879

0.911325

0.839141

0.77524

0.718663

0.668564

0.624192

0.584885

0.550058

0.519194

0.491835

0.467578

0.446063

0.426977

0.410039

0.395001

0.381647

0.369783

0.359238

0.349861

0.341519

0.334094

0.327481

0.321587

0.316332

0.311643

0.307455

0.303713

0.300366

0.29737

0.294686

0.292278

0.290117

0.288175

0.286427

0.284853

0.283433

0.282151

0.280991

0.279942

0.27899

0.278125

0.277339

0.276623

0.27597

0.275373

0.274826

0.274325

0.273865

0.273442

0.273052

0.272693

0.27236

0.272052

0.271767

0.271501

0.271254

0.271024

0.27081

0.270609

0.270421

0.270245

0.27008

0.269925

0.269779

0.269642

0.269512

0.26939

0.269274

0.269165

0.269062

0.268964

0.268871

0.268783

0.268699

0.26862

0.268544

0.268472

0.268404

0.268339

0.268277

0.268218

0.268161

0.268107

0.268056

0.268007

0.26796

0.267916

0.267873

0.267832

0.267794

0.267756

0.267721

0.267687

0.267654

0.267623

0.267594

0.267565

0.267538

0.267512

0.267487

0.267464

0.267441

0.267419

0.267398

0.267378

0.267359

0.267341

0.267324

0.267307

0.267291

0.267276

0.267261

0.267247

0.267234

0.267221

0.267209

0.267197

0.267186

0.267176

0.267165

0.267156

0.267146

0.267137

0.267129

0.267121

0.267113

0.267105

0.267098

0.267092

0.267085

0.267079

0.267073

0.267067

0.267062

0.267057

0.267052

0.267047

0.267043

0.267039

0.267034

0.267031

0.267027

0.267023

0.26702

0.267017

0.267014

0.267011

0.267008

0.267006

0.267003

0.267001

0.266998

0.266996

0.266994

0.266992

0.26699

0.266989

0.266987

0.266985

0.266984

0.266982

0.266981

0.26698

0.266979

0.266978

0.266976

0.266975

0.266974
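For comparison, here is a dependency-free sketch that mirrors the training loop in plain Python: per-sample gradient descent on the same cost, full-data cost after each epoch, and the same `1e-6` early-stopping rule. It assumes the stdlib `random` module as a stand-in for NumPy and `tf.random_normal`, and the helper names `make_data`, `cost`, `train`, and `least_squares` are hypothetical. It also computes the closed-form least-squares fit, which minimizes the same cost, so you can see how close gradient descent gets. Because the noise is drawn differently, the costs will not match the printed values above exactly.

```python
import math
import random


def make_data(n_observations=100, seed=0):
    """Noisy sine data shaped like the tutorial's xs, ys (stdlib stand-in for NumPy)."""
    rng = random.Random(seed)
    xs = [-3.0 + 6.0 * i / (n_observations - 1) for i in range(n_observations)]
    ys = [math.sin(x) + rng.uniform(-0.5, 0.5) for x in xs]
    return xs, ys


def cost(w, b, xs, ys):
    """Sum of squared residuals divided by (n - 1), matching the graph's cost."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / (len(xs) - 1)


def train(xs, ys, learning_rate=0.01, n_epochs=1000, tol=1e-6, seed=0):
    """Per-sample gradient descent with the tutorial's early-stopping rule."""
    rng = random.Random(seed)
    w, b = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)  # like tf.random_normal([1])
    scale = 2.0 / (len(xs) - 1)
    prev_cost, history = 0.0, []
    for _ in range(n_epochs):
        for x, y in zip(xs, ys):  # one update per observation
            residual = w * x + b - y
            w -= learning_rate * scale * residual * x
            b -= learning_rate * scale * residual
        training_cost = cost(w, b, xs, ys)
        history.append(training_cost)
        if abs(prev_cost - training_cost) < tol:  # cost has stopped changing
            break
        prev_cost = training_cost
    return w, b, history


def least_squares(xs, ys):
    """Closed-form minimizer of the same cost (ordinary least squares)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, mean_y - w * mean_x


xs, ys = make_data()
w, b, history = train(xs, ys)
w_ls, b_ls = least_squares(xs, ys)
print("epochs run:", len(history))
print("gradient descent: w=%.4f b=%.4f cost=%.6f" % (w, b, history[-1]))
print("least squares:    w=%.4f b=%.4f cost=%.6f" % (w_ls, b_ls, cost(w_ls, b_ls, xs, ys)))
```

Because training stops once the full-data cost changes by less than `1e-6` between epochs, it can end well before `n_epochs`; the TensorFlow run above printed 173 costs out of a possible 1000. A straight line cannot follow the sine curve, so the cost plateaus above zero even at the least-squares optimum.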
