7.1 RNN in TensorFlow - TimeSeries Data

```python
import math
import os

import numpy as np
np.random.seed(123)
print("NumPy:{}".format(np.__version__))

import pandas as pd
print("Pandas:{}".format(pd.__version__))

import sklearn as sk
from sklearn import preprocessing as skpp
print("sklearn:{}".format(sk.__version__))

import matplotlib as mpl
import matplotlib.pyplot as plt
mpl.rcParams.update({'font.size': 20,
                     'figure.figsize': [15, 10]
                     })
print("Matplotlib:{}".format(mpl.__version__))

# This notebook uses the TensorFlow 1.x API (placeholders, sessions, tf.set_random_seed)
import tensorflow as tf
tf.set_random_seed(123)
print("TensorFlow:{}".format(tf.__version__))
```

```
NumPy:1.13.1
Pandas:0.21.0
sklearn:0.19.1
Matplotlib:2.1.0
TensorFlow:1.4.1
```

```python
import sys

# Make the book's datasetslib package importable
DATASETSLIB_HOME = '../datasetslib'
if DATASETSLIB_HOME not in sys.path:
    sys.path.append(DATASETSLIB_HOME)

# IPython magics: reload edited modules automatically
%reload_ext autoreload
%autoreload 2

import datasetslib
from datasetslib import util as dsu
datasetslib.datasets_root = os.path.join(os.path.expanduser('~'), 'datasets')
```

Read and pre-process the dataset

```python
filepath = os.path.join(datasetslib.datasets_root,
                        'ts-data',
                        'international-airline-passengers-cleaned.csv'
                        )
# Keep only the second column (the passenger counts)
dataframe = pd.read_csv(filepath,
                        usecols=[1],
                        header=0)
dataset = dataframe.values
dataset = dataset.astype(np.float32)
```

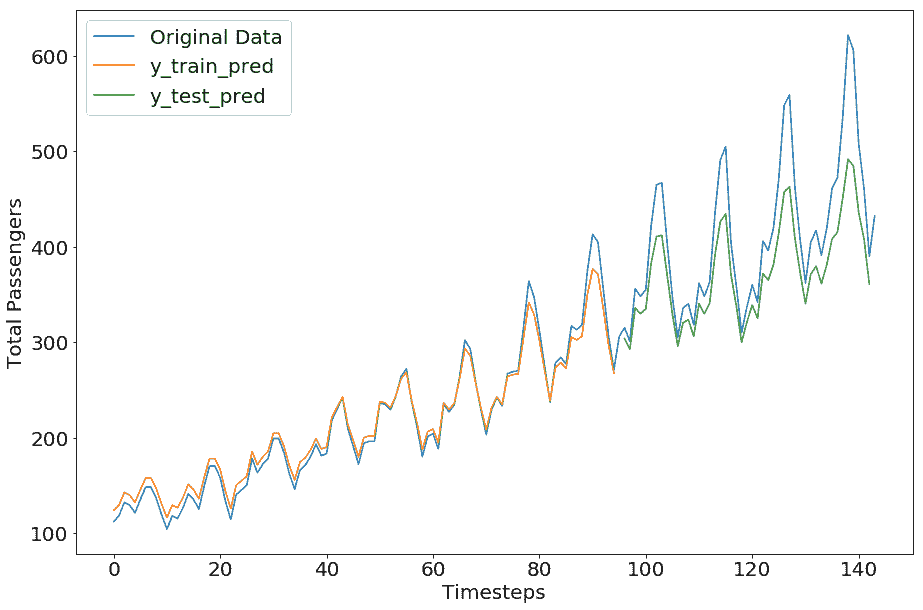

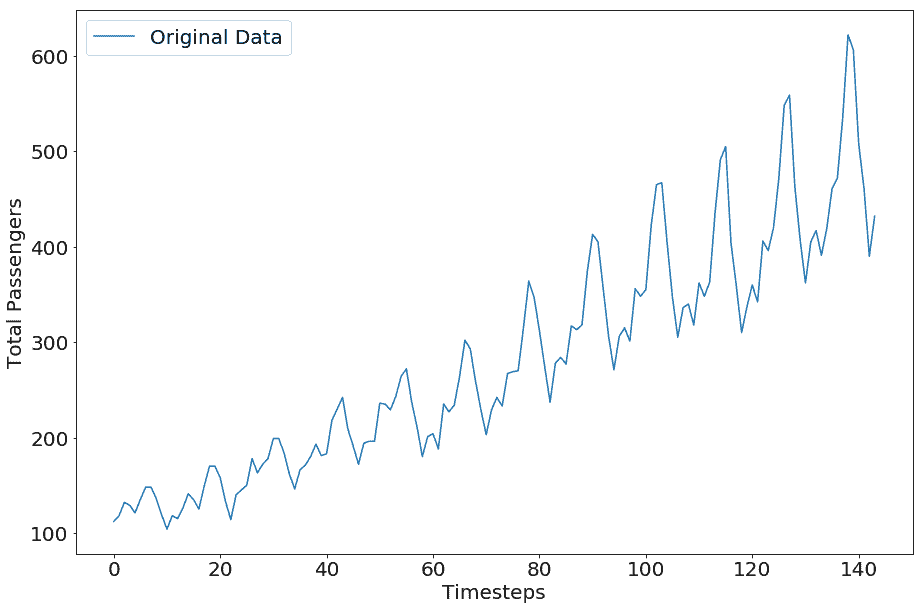

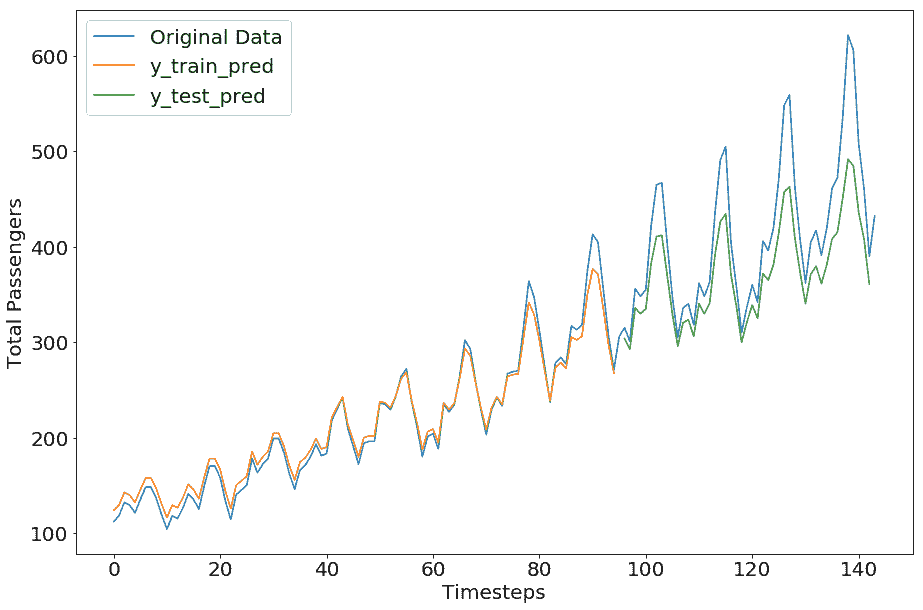

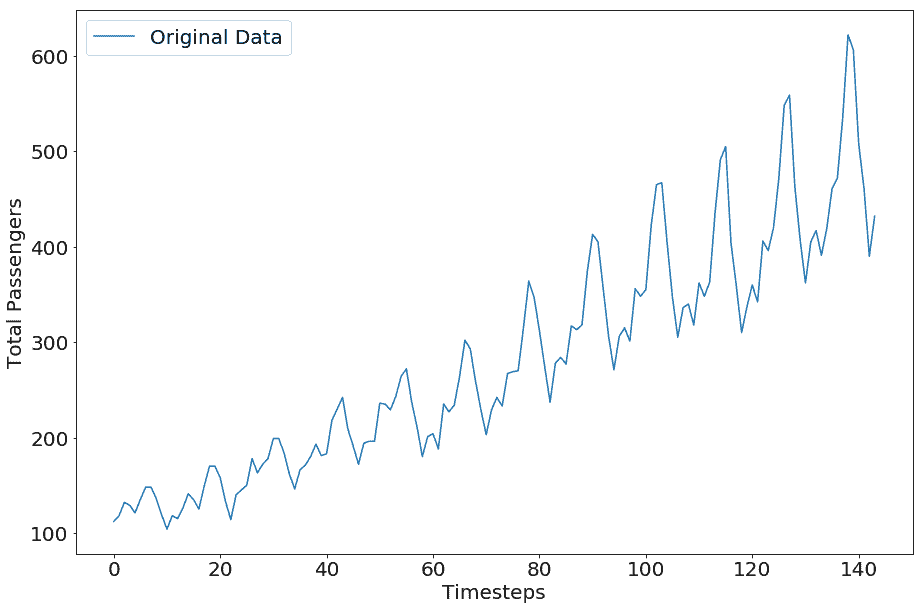

```python
plt.plot(dataset, label='Original Data')
plt.legend()
plt.xlabel('Timesteps')
plt.ylabel('Total Passengers')
plt.show()
```

```python
# Rescale the series into [0, 1]; the scaler is fit on the full series here
scaler = skpp.MinMaxScaler(feature_range=(0, 1))
normalized_dataset = scaler.fit_transform(dataset)
```
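For reference, `MinMaxScaler(feature_range=(0, 1))` maps each column with x' = (x - min) / (max - min), and `inverse_transform` reverses that mapping. The cell above fits the scaler on the full series, so the test portion's range also shapes the scaling; a common alternative is to fit on the training slice only. The NumPy sketch below shows the transform, its inverse, and that alternative on a toy series. The helper names `minmax_fit`, `minmax_transform` and `minmax_inverse` are hypothetical, not scikit-learn functions.

```python
import numpy as np

def minmax_fit(x):
    """Per-column minimum and maximum: the statistics MinMaxScaler learns in fit()."""
    return x.min(axis=0), x.max(axis=0)

def minmax_transform(x, data_min, data_max):
    """Map values so that data_min -> 0 and data_max -> 1."""
    return (x - data_min) / (data_max - data_min)

def minmax_inverse(x_scaled, data_min, data_max):
    """Undo minmax_transform, returning values in the original units."""
    return x_scaled * (data_max - data_min) + data_min

# Toy series (not the airline data): the first three points play the training split
series = np.array([[10.0], [20.0], [30.0], [40.0], [50.0]])
train_min, train_max = minmax_fit(series[:3])        # fit on training rows only
scaled = minmax_transform(series, train_min, train_max)
print(scaled.ravel())   # later values land above 1 because they exceed the training max
restored = minmax_inverse(scaled, train_min, train_max)
```

Fitting on the training rows only means test values can fall outside [0, 1], which is the honest situation at forecast time.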

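```python
# 67% of the series for training, the rest for testing
train, test = dsu.train_test_split(normalized_dataset, train_size=0.67)

# One lagged input value (n_x) predicts one future value (n_y)
n_x = 1
n_y = 1
X_train, Y_train, X_test, Y_test = dsu.mvts_to_xy(train, test, n_x=n_x, n_y=n_y)
```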
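`dsu.train_test_split` and `dsu.mvts_to_xy` come from the book's `datasetslib` helper package, whose source is not reproduced here. As a rough, hypothetical stand-in, the sketch below assumes an order-preserving split and a sliding window in which `n_x` consecutive values form an input row and the following `n_y` values form the target. The real helpers may differ in edge handling or output shape; `sequential_split` and `series_to_xy` are illustrative names only.

```python
import numpy as np

def sequential_split(data, train_size=0.67):
    """Split a series in time order (no shuffling): earlier rows train, later rows test."""
    n_train = int(len(data) * train_size)
    return data[:n_train], data[n_train:]

def series_to_xy(series, n_x=1, n_y=1):
    """Slide a window over a (T, 1) series: n_x past values -> the next n_y values."""
    values = np.asarray(series).ravel()
    X, Y = [], []
    for t in range(len(values) - n_x - n_y + 1):
        X.append(values[t:t + n_x])
        Y.append(values[t + n_x:t + n_x + n_y])
    return np.array(X), np.array(Y)

# Toy series 0, 1, ..., 9 standing in for normalized_dataset
data = np.arange(10, dtype=np.float32).reshape(-1, 1)
train_part, test_part = sequential_split(data, train_size=0.5)
X_tr, Y_tr = series_to_xy(train_part, n_x=1, n_y=1)
X_te, Y_te = series_to_xy(test_part, n_x=1, n_y=1)

# The TF placeholders expect (batch, n_timesteps, n_vars), hence the reshape in the training loop
X_tr_3d = X_tr.reshape(-1, 1, 1)
```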

TensorFlow SimpleRNN for TimeSeries Data

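```python
state_size = 4        # number of units in the RNN cell
n_epochs = 100
n_timesteps = n_x     # length of each input window
n_x_vars = 1          # input features per timestep
n_y_vars = 1          # output features per timestep
learning_rate = 0.1
```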

```python
tf.reset_default_graph()

# Inputs and targets shaped (batch, n_timesteps, n_vars)
X_p = tf.placeholder(tf.float32, [None, n_timesteps, n_x_vars], name='X_p')
Y_p = tf.placeholder(tf.float32, [None, n_timesteps, n_y_vars], name='Y_p')

# static_rnn expects a Python list with one (batch, n_x_vars) tensor per timestep
rnn_inputs = tf.unstack(X_p, axis=1)

cell = tf.nn.rnn_cell.BasicRNNCell(state_size)
rnn_outputs, final_state = tf.nn.static_rnn(cell,
                                            rnn_inputs,
                                            dtype=tf.float32
                                            )

# Dense readout shared across timesteps: state_size -> n_y_vars
W = tf.get_variable('W', [state_size, n_y_vars])
b = tf.get_variable('b', [n_y_vars], initializer=tf.constant_initializer(0.0))
predictions = [tf.matmul(rnn_output, W) + b for rnn_output in rnn_outputs]
```
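To make the graph above concrete, here is a NumPy sketch of its forward pass. It assumes the usual BasicRNNCell update h_t = tanh([x_t, h_{t-1}] K + b), a zero initial state (what `static_rnn` starts from when only `dtype` is given), and the shared dense readout played by `W` and `b`. The function name `basic_rnn_forward` and the random weights are illustrative only.

```python
import numpy as np

def basic_rnn_forward(x_seq, kernel, bias, W_out, b_out):
    """Unrolled Elman RNN with a dense readout at every timestep.

    x_seq: (batch, n_timesteps, n_x_vars); kernel: (n_x_vars + state_size, state_size).
    Returns one (batch, n_y_vars) prediction per timestep and the final state.
    """
    batch, n_timesteps, _ = x_seq.shape
    h = np.zeros((batch, kernel.shape[1]))                 # zero initial state
    predictions = []
    for t in range(n_timesteps):
        xh = np.concatenate([x_seq[:, t, :], h], axis=1)   # [x_t, h_{t-1}]
        h = np.tanh(xh @ kernel + bias)                    # cell output == new state
        predictions.append(h @ W_out + b_out)              # role of W and b above
    return predictions, h

rng = np.random.RandomState(123)
state_size, n_x_vars, n_y_vars, n_timesteps = 4, 1, 1, 1
kernel = rng.normal(scale=0.5, size=(n_x_vars + state_size, state_size))
bias = np.zeros(state_size)
W_out = rng.normal(scale=0.5, size=(state_size, n_y_vars))
b_out = np.zeros(n_y_vars)
x = rng.rand(5, n_timesteps, n_x_vars)                     # batch of 5 windows
preds, final_h = basic_rnn_forward(x, kernel, bias, W_out, b_out)
```

With `n_timesteps = n_x = 1`, as in this notebook, the state entering the only step is zero, so the recurrent rows of the kernel never affect the prediction; the model effectively behaves like a one-hidden-layer network applied to the previous value. Longer windows (larger `n_x`) are what let the recurrence contribute.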

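```python
print(predictions)
```

```
[<tf.Tensor 'add:0' shape=(?, 1) dtype=float32>]
```

```python
# Split the targets the same way so each prediction pairs with its label
y_as_list = tf.unstack(Y_p, num=n_timesteps, axis=1)
print(y_as_list)
```

```
[<tf.Tensor 'unstack_1:0' shape=(?, 1) dtype=float32>]
```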

```python
# Mean squared error per timestep, then averaged over timesteps
mse = tf.losses.mean_squared_error
losses = [mse(labels=label, predictions=prediction)
          for prediction, label in zip(predictions, y_as_list)
          ]
total_loss = tf.reduce_mean(losses)
```
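With its default weight of 1.0, `tf.losses.mean_squared_error` averages the squared error over every element of a timestep's batch, and `tf.reduce_mean` then averages those per-timestep values. A minimal NumPy equivalent, assuming the list-per-timestep layout used above (`sequence_mse` is a hypothetical name):

```python
import numpy as np

def sequence_mse(predictions, labels):
    """Average over timesteps of the per-timestep mean squared error.

    predictions, labels: lists with one (batch, n_y_vars) array per timestep,
    the same layout as `predictions` and `y_as_list` in the graph.
    """
    per_step = [np.mean((p - y) ** 2) for p, y in zip(predictions, labels)]
    return float(np.mean(per_step))

# Two timesteps, batch of two: per-step MSEs are 1.0 and 0.125
preds = [np.array([[1.0], [1.0]]), np.array([[0.5], [0.0]])]
labels = [np.array([[0.0], [0.0]]), np.array([[0.0], [0.0]])]
loss = sequence_mse(preds, labels)
```

In this notebook there is only one timestep, so `total_loss` reduces to the plain MSE over the batch.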

```python
optimizer = tf.train.AdagradOptimizer(learning_rate).minimize(total_loss)
```
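Adagrad divides each parameter's step by the square root of that parameter's accumulated squared gradients, so steps shrink as training goes on. The sketch below follows the update rule TensorFlow documents for `AdagradOptimizer` (accumulator starting at the default `initial_accumulator_value=0.1`) on a toy one-parameter objective (w - 3)^2. The objective and the learning rate of 1.0 are chosen only so the toy converges within a few hundred steps; `adagrad_step` is a hypothetical name.

```python
import numpy as np

def adagrad_step(w, grad, accum, lr):
    """One Adagrad update: accum += grad**2, then w -= lr * grad / sqrt(accum)."""
    accum = accum + grad ** 2
    w = w - lr * grad / np.sqrt(accum)
    return w, accum

# Toy objective (w - 3)^2 with gradient 2 * (w - 3)
w = np.array([0.0])
accum = np.full_like(w, 0.1)      # default initial_accumulator_value in TF's AdagradOptimizer
for _ in range(200):
    grad = 2.0 * (w - 3.0)
    w, accum = adagrad_step(w, grad, accum, lr=1.0)
```

Because the accumulator only grows, a fixed `learning_rate` effectively decays over time, which is one reason the LSTM and GRU runs below use more epochs.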

```python
with tf.Session() as tfs:
    tfs.run(tf.global_variables_initializer())
    epoch_loss = 0.0
    for epoch in range(n_epochs):
        # Full-batch training: the whole training set is fed every epoch
        feed_dict = {X_p: X_train.reshape(-1, n_timesteps, n_x_vars),
                     Y_p: Y_train.reshape(-1, n_timesteps, n_y_vars)
                     }
        epoch_loss, y_train_pred, _ = tfs.run([total_loss, predictions, optimizer],
                                              feed_dict=feed_dict
                                              )
    print("train mse = {}".format(epoch_loss))

    # Evaluate on the test set (no optimizer op, so the weights are not updated)
    feed_dict = {X_p: X_test.reshape(-1, n_timesteps, n_x_vars),
                 Y_p: Y_test.reshape(-1, n_timesteps, n_y_vars)
                 }
    test_loss, y_test_pred = tfs.run([total_loss, predictions],
                                     feed_dict=feed_dict
                                     )
    print('test mse = {}'.format(test_loss))
    print('test rmse = {}'.format(math.sqrt(test_loss)))
```

```
train mse = 0.002138530369848013
test mse = 0.014468207024037838
test rmse = 0.12028386019760855
```
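These losses are measured on the [0, 1] scale. Because min-max scaling is affine, the offset cancels in every error term, and the RMSE in passengers is the scaled RMSE times the fitted range (available as `scaler.data_range_` on a fitted `MinMaxScaler`). A small check with made-up numbers; the range and values below are hypothetical, not taken from the airline data:

```python
import math
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error between two arrays."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return math.sqrt(float(np.mean(diff ** 2)))

def to_original(scaled, data_min, data_max):
    """Inverse of a [0, 1] min-max scaling."""
    return scaled * (data_max - data_min) + data_min

# Hypothetical range and values, for illustration only
data_min, data_max = 100.0, 600.0
y_true_scaled = np.array([0.2, 0.4, 0.6])
y_pred_scaled = np.array([0.25, 0.35, 0.70])

rmse_scaled = rmse(y_true_scaled, y_pred_scaled)
rmse_orig = rmse(to_original(y_true_scaled, data_min, data_max),
                 to_original(y_pred_scaled, data_min, data_max))
# data_min cancels in each difference, leaving only the factor (data_max - data_min)
```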

```python
# predictions is a list with one entry per timestep; with n_timesteps = 1 take the only one
y_train_pred = y_train_pred[0]
y_test_pred = y_test_pred[0]

# Map predictions and targets back to passenger counts
y_train_pred = scaler.inverse_transform(y_train_pred)
y_test_pred = scaler.inverse_transform(y_test_pred)
y_train_orig = scaler.inverse_transform(Y_train)
y_test_orig = scaler.inverse_transform(Y_test)

# NaN-filled arrays the length of the series; NaN points are not drawn by plt.plot
trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[n_x-1:len(y_train_pred)+n_x-1, :] = y_train_pred

testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(y_train_pred)+(n_x*2)-1:len(dataset)-1, :] = y_test_pred
```
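The plotting arrays work by writing predictions into a NaN-filled copy of the series; `plt.plot` leaves gaps at NaN points, so each curve is drawn only over the timesteps it covers. A generic version of that idea, with a hypothetical helper name. The specific offsets used above depend on how `dsu.mvts_to_xy` aligns its windows, which is not shown here.

```python
import numpy as np

def place_on_timeline(preds, start, total_len):
    """Return a (total_len, 1) float array of NaN with preds written from row `start` on."""
    canvas = np.full((total_len, 1), np.nan, dtype=np.float32)
    preds = np.asarray(preds, dtype=np.float32).reshape(-1, 1)
    canvas[start:start + len(preds), :] = preds
    return canvas

# Two predictions placed at timesteps 3 and 4 of a six-step series
line = place_on_timeline([1.0, 2.0], start=3, total_len=6)
```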

```python
plt.plot(dataset, label='Original Data')
plt.plot(trainPredictPlot, label='y_train_pred')
plt.plot(testPredictPlot, label='y_test_pred')
plt.legend()
plt.xlabel('Timesteps')
plt.ylabel('Total Passengers')
plt.show()
```

TensorFlow LSTM for TimeSeries Data

```python
state_size = 4
n_epochs = 600
n_timesteps = n_x
n_x_vars = 1
n_y_vars = 1
learning_rate = 0.1
```

```python
tf.reset_default_graph()
X_p = tf.placeholder(tf.float32, [None, n_timesteps, n_x_vars], name='X_p')
Y_p = tf.placeholder(tf.float32, [None, n_timesteps, n_y_vars], name='Y_p')
rnn_inputs = tf.unstack(X_p, axis=1)

# LSTMCell replaces BasicRNNCell; the rest of the graph is unchanged
cell = tf.nn.rnn_cell.LSTMCell(state_size)
rnn_outputs, final_state = tf.nn.static_rnn(cell, rnn_inputs, dtype=tf.float32)

W = tf.get_variable('W', [state_size, n_y_vars])
b = tf.get_variable('b', [n_y_vars], initializer=tf.constant_initializer(0.0))
predictions = [tf.matmul(rnn_output, W) + b for rnn_output in rnn_outputs]
```
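Only the cell changes here. An LSTM keeps a separate cell state `c` alongside the output `h`, updated through input, forget and output gates. In TensorFlow 1.x, `LSTMCell` returns its final state as an `LSTMStateTuple(c, h)` by default, while `rnn_outputs` holds `h`, so the readout `W` keeps its `[state_size, n_y_vars]` shape. Below is a NumPy sketch of one step using the standard LSTM equations; the fused kernel layout and gate order are assumptions of this sketch, and `forget_bias=1.0` mirrors `LSTMCell`'s default.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, kernel, bias, forget_bias=1.0):
    """One LSTM step with a fused kernel of shape (n_in + state_size, 4 * state_size)."""
    z = np.concatenate([x_t, h_prev], axis=1) @ kernel + bias
    i, j, f, o = np.split(z, 4, axis=1)   # input gate, candidate, forget gate, output gate
    c = sigmoid(f + forget_bias) * c_prev + sigmoid(i) * np.tanh(j)   # new cell state
    h = sigmoid(o) * np.tanh(c)                                        # new output
    return h, c

state_size, n_in, batch = 4, 1, 3
rng = np.random.RandomState(0)
kernel = rng.normal(scale=0.1, size=(n_in + state_size, 4 * state_size))
bias = np.zeros(4 * state_size)
h = np.zeros((batch, state_size))
c = np.zeros((batch, state_size))
x = rng.rand(batch, 1, n_in)              # (batch, n_timesteps, n_x_vars)
for t in range(x.shape[1]):
    h, c = lstm_step(x[:, t, :], h, c, kernel, bias)
```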

```python
y_as_list = tf.unstack(Y_p, num=n_timesteps, axis=1)
mse = tf.losses.mean_squared_error
losses = [mse(labels=label, predictions=prediction)
          for prediction, label in zip(predictions, y_as_list)
          ]
total_loss = tf.reduce_mean(losses)
optimizer = tf.train.AdagradOptimizer(learning_rate).minimize(total_loss)
```

```python
with tf.Session() as tfs:
    tfs.run(tf.global_variables_initializer())
    epoch_loss = 0.0
    for epoch in range(n_epochs):
        feed_dict = {X_p: X_train.reshape(-1, n_timesteps, n_x_vars),
                     Y_p: Y_train.reshape(-1, n_timesteps, n_y_vars)
                     }
        epoch_loss, y_train_pred, _ = tfs.run([total_loss,
                                               predictions,
                                               optimizer],
                                              feed_dict=feed_dict
                                              )
    print("train mse = {}".format(epoch_loss))

    feed_dict = {X_p: X_test.reshape(-1, n_timesteps, n_x_vars),
                 Y_p: Y_test.reshape(-1, n_timesteps, n_y_vars)
                 }
    test_loss, y_test_pred = tfs.run([total_loss, predictions],
                                     feed_dict=feed_dict)
    print('test mse = {}'.format(test_loss))
    print('test rmse = {}'.format(math.sqrt(test_loss)))
```

```python
y_train_pred = y_train_pred[0]
y_test_pred = y_test_pred[0]

y_train_pred = scaler.inverse_transform(y_train_pred)
y_test_pred = scaler.inverse_transform(y_test_pred)
y_train_orig = scaler.inverse_transform(Y_train)
y_test_orig = scaler.inverse_transform(Y_test)

trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[n_x-1:len(y_train_pred)+n_x-1, :] = y_train_pred

testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(y_train_pred)+(n_x*2)-1:len(dataset)-1, :] = y_test_pred
```

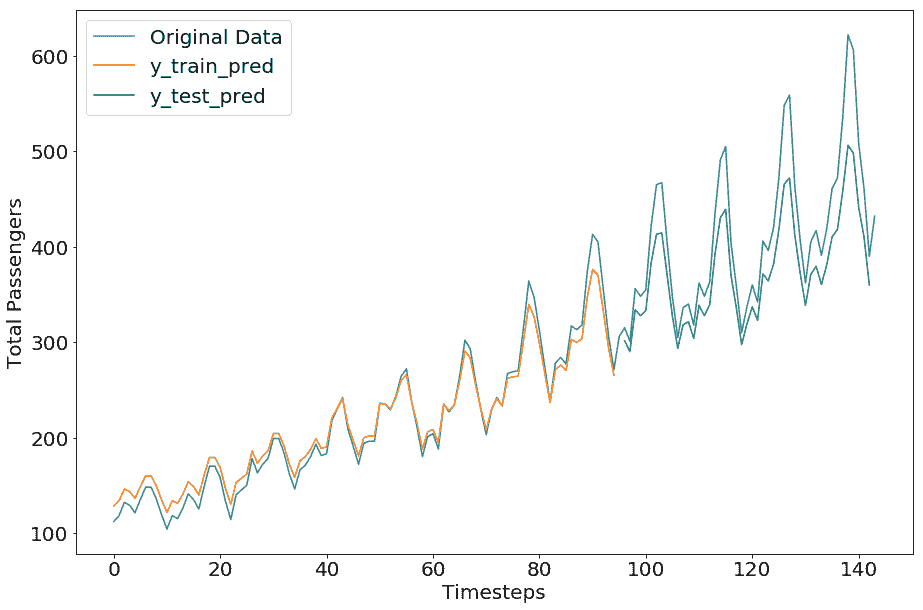

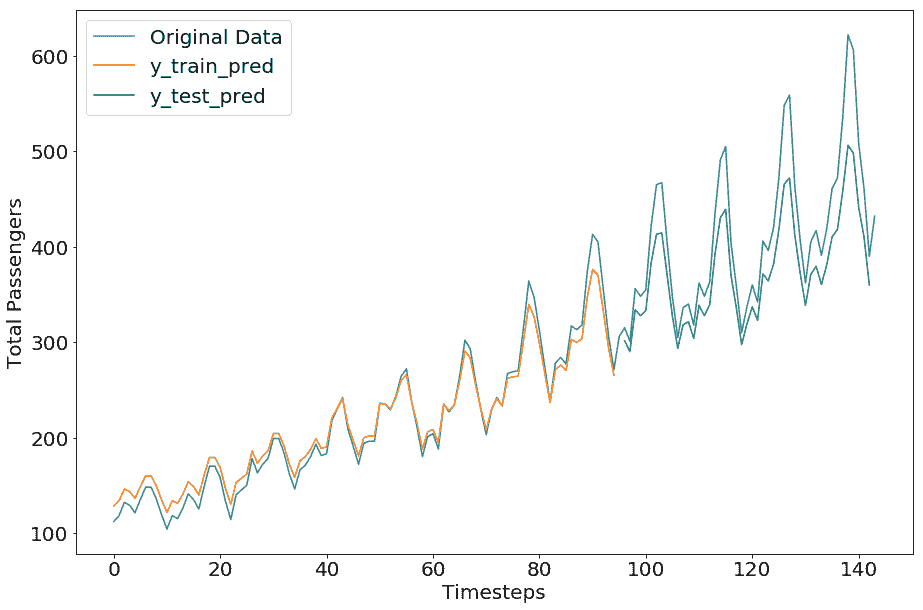

```python
plt.plot(dataset, label='Original Data')
plt.plot(trainPredictPlot, label='y_train_pred')
plt.plot(testPredictPlot, label='y_test_pred')
plt.legend()
plt.xlabel('Timesteps')
plt.ylabel('Total Passengers')
plt.show()
```

```
train mse = 0.0019662242848426104
test mse = 0.012980078347027302
test rmse = 0.11393014678752636
```

TensorFlow GRU for TimeSeries Data

```python
state_size = 4
n_epochs = 600
n_timesteps = n_x
n_x_vars = 1
n_y_vars = 1
learning_rate = 0.1
```

```python
tf.reset_default_graph()
X_p = tf.placeholder(tf.float32, [None, n_timesteps, n_x_vars], name='X_p')
Y_p = tf.placeholder(tf.float32, [None, n_timesteps, n_y_vars], name='Y_p')
rnn_inputs = tf.unstack(X_p, axis=1)

# GRUCell replaces LSTMCell; the rest of the graph is unchanged
cell = tf.nn.rnn_cell.GRUCell(state_size)
rnn_outputs, final_state = tf.nn.static_rnn(cell, rnn_inputs, dtype=tf.float32)

W = tf.get_variable('W', [state_size, n_y_vars])
b = tf.get_variable('b', [n_y_vars], initializer=tf.constant_initializer(0.0))
predictions = [tf.matmul(rnn_output, W) + b for rnn_output in rnn_outputs]
```
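A GRU replaces the LSTM's three gates with a reset gate and an update gate and keeps no separate cell state, so `final_state` is simply `h`. Below is a NumPy sketch of one step using the standard GRU equations; the separate gate and candidate kernels and the helper name `gru_step` are assumptions of this sketch, not TensorFlow's internal variable layout.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, gate_kernel, gate_bias, cand_kernel, cand_bias):
    """One GRU step.

    gate_kernel: (n_in + state_size, 2 * state_size) -> reset and update gates
    cand_kernel: (n_in + state_size, state_size)     -> candidate state
    """
    xh = np.concatenate([x_t, h_prev], axis=1)
    r, u = np.split(sigmoid(xh @ gate_kernel + gate_bias), 2, axis=1)
    # The candidate sees the previous state only through the reset gate
    c = np.tanh(np.concatenate([x_t, r * h_prev], axis=1) @ cand_kernel + cand_bias)
    # The update gate interpolates between keeping h_prev and taking the candidate
    return u * h_prev + (1.0 - u) * c

state_size, n_in, batch = 4, 1, 3
rng = np.random.RandomState(0)
gate_kernel = rng.normal(scale=0.1, size=(n_in + state_size, 2 * state_size))
cand_kernel = rng.normal(scale=0.1, size=(n_in + state_size, state_size))
gate_bias = np.zeros(2 * state_size)
cand_bias = np.zeros(state_size)
h = np.zeros((batch, state_size))
x = rng.rand(batch, 1, n_in)              # (batch, n_timesteps, n_x_vars)
for t in range(x.shape[1]):
    h = gru_step(x[:, t, :], h, gate_kernel, gate_bias, cand_kernel, cand_bias)
```

With the same `state_size`, the GRU has three weight blocks against the LSTM's four, so it is somewhat smaller.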

```python
y_as_list = tf.unstack(Y_p, num=n_timesteps, axis=1)
mse = tf.losses.mean_squared_error
losses = [mse(labels=label, predictions=prediction)
          for prediction, label in zip(predictions, y_as_list)
          ]
total_loss = tf.reduce_mean(losses)
optimizer = tf.train.AdagradOptimizer(learning_rate).minimize(total_loss)
```

```python
with tf.Session() as tfs:
    tfs.run(tf.global_variables_initializer())
    epoch_loss = 0.0
    for epoch in range(n_epochs):
        feed_dict = {X_p: X_train.reshape(-1, n_timesteps, n_x_vars),
                     Y_p: Y_train.reshape(-1, n_timesteps, n_y_vars)
                     }
        epoch_loss, y_train_pred, _ = tfs.run([total_loss,
                                               predictions,
                                               optimizer],
                                              feed_dict=feed_dict
                                              )
    print("train mse = {}".format(epoch_loss))

    feed_dict = {X_p: X_test.reshape(-1, n_timesteps, n_x_vars),
                 Y_p: Y_test.reshape(-1, n_timesteps, n_y_vars)
                 }
    test_loss, y_test_pred = tfs.run([total_loss, predictions],
                                     feed_dict=feed_dict)
    print('test mse = {}'.format(test_loss))
    print('test rmse = {}'.format(math.sqrt(test_loss)))
```

```python
y_train_pred = y_train_pred[0]
y_test_pred = y_test_pred[0]

y_train_pred = scaler.inverse_transform(y_train_pred)
y_test_pred = scaler.inverse_transform(y_test_pred)
y_train_orig = scaler.inverse_transform(Y_train)
y_test_orig = scaler.inverse_transform(Y_test)

trainPredictPlot = np.empty_like(dataset)
trainPredictPlot[:, :] = np.nan
trainPredictPlot[n_x-1:len(y_train_pred)+n_x-1, :] = y_train_pred

testPredictPlot = np.empty_like(dataset)
testPredictPlot[:, :] = np.nan
testPredictPlot[len(y_train_pred)+(n_x*2)-1:len(dataset)-1, :] = y_test_pred
```

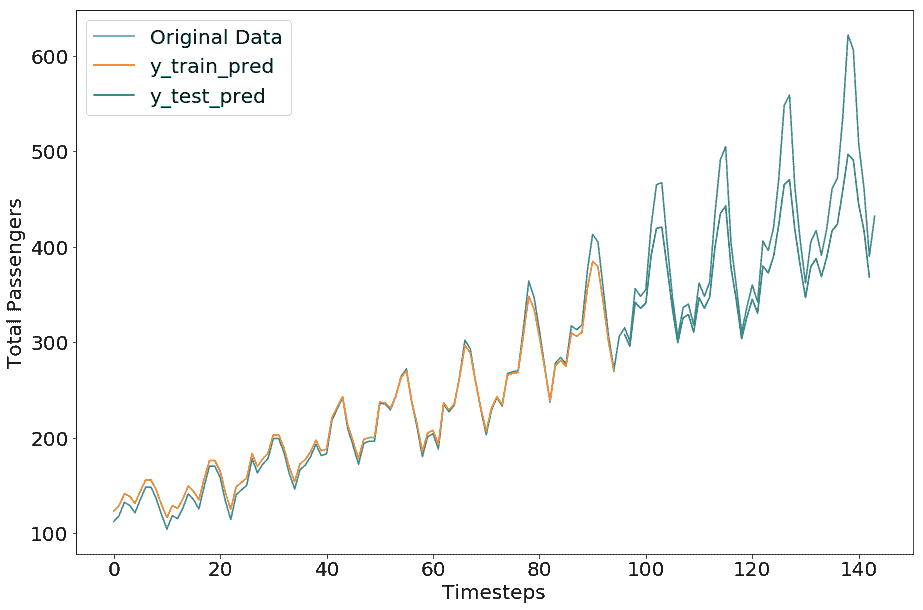

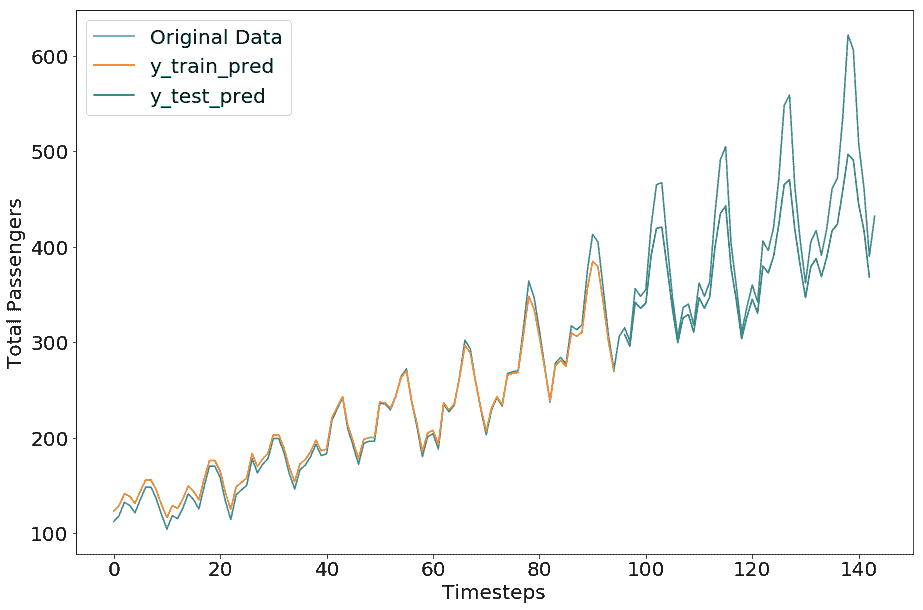

```python
plt.plot(dataset, label='Original Data')
plt.plot(trainPredictPlot, label='y_train_pred')
plt.plot(testPredictPlot, label='y_test_pred')
plt.legend()
plt.xlabel('Timesteps')
plt.ylabel('Total Passengers')
plt.show()
```

```
train mse = 0.002038003643974662
test mse = 0.015027225948870182
test rmse = 0.12258558621987407
```
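Across these single runs, the test RMSE on the scaled data is about 0.120 for the SimpleRNN, 0.114 for the LSTM and 0.123 for the GRU. Differences this small may not survive a change of seed, and a useful yardstick for one-step forecasts is the persistence baseline, which predicts each value as the previous one. The sketch below computes it, assuming `X_test` has shape (samples, n_x) with the most recent value last and `Y_test` has shape (samples, n_y); that layout is an assumption about `dsu.mvts_to_xy`, and `persistence_rmse` is a hypothetical name.

```python
import math
import numpy as np

def persistence_rmse(X, Y):
    """RMSE of the naive forecast 'next value = last observed value'.

    Assumes X is (samples, n_x) with the most recent value last and Y is (samples, n_y).
    """
    last_seen = np.asarray(X, dtype=float)[:, -1]
    target = np.asarray(Y, dtype=float)[:, 0]
    return math.sqrt(float(np.mean((target - last_seen) ** 2)))

# Toy check: errors 1, 1, 2 give sqrt((1 + 1 + 4) / 3)
demo = persistence_rmse(np.array([[0.0], [1.0], [2.0]]), np.array([[1.0], [2.0], [4.0]]))

# With the notebook's arrays (scaled units, comparable to the test rmse values above):
# print('persistence test rmse = {}'.format(persistence_rmse(X_test, Y_test)))
```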