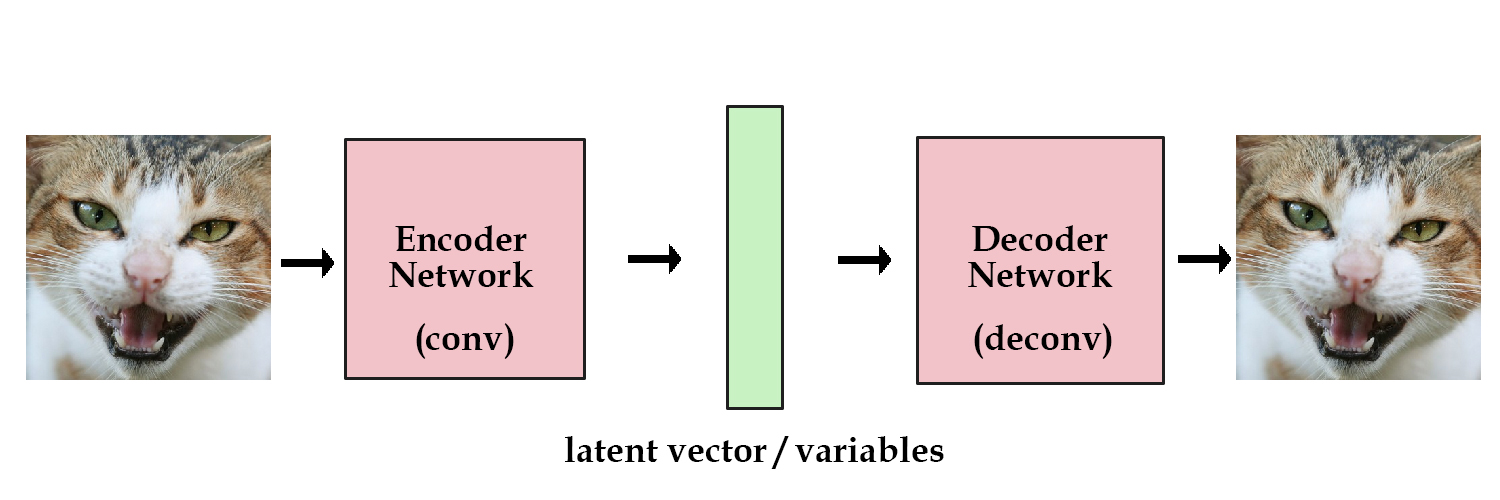

Auto-Encoder Example

Build a two-layer auto-encoder with TensorFlow that compresses images into a lower-dimensional latent space and then reconstructs them.

- Author: Aymeric Damien

- Project: https://github.com/aymericdamien/TensorFlow-Examples/

Auto-Encoder Overview

References:

- Gradient-based learning applied to document recognition. Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. Proceedings of the IEEE, 86(11):2278-2324, November 1998.

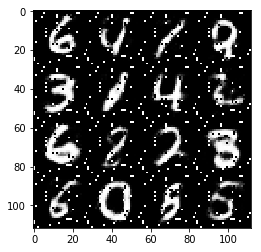

MNIST Dataset Overview

This example uses the MNIST handwritten digits dataset, which contains 60,000 training examples and 10,000 test examples. The digits have been size-normalized and centered in a fixed-size image (28x28 pixels) with pixel values from 0 to 1. For simplicity, each image has been flattened into a 1-D NumPy array of 784 features (28*28).

More info: http://yann.lecun.com/exdb/mnist/
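To make the flattening step concrete, here is a minimal NumPy sketch. It uses a random array as a hypothetical stand-in for one digit (it does not load MNIST) and shows that reshaping a 28x28 image into 784 features is lossless.

```python
import numpy as np

# Hypothetical stand-in for one MNIST digit: a 28x28 grid of values in [0, 1).
rng = np.random.default_rng(0)
image = rng.random((28, 28)).astype(np.float32)

# Flatten row by row into a 1-D vector of 28*28 = 784 features.
flat = image.reshape(-1)

# Reshaping back recovers the original 2-D layout exactly.
restored = flat.reshape(28, 28)

print(flat.shape)      # (784,)
print(restored.shape)  # (28, 28)
```

Because the flattening is row-major, feature index `28 * r + c` corresponds to pixel `(r, c)` of the image.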

from __future__ import division, print_function, absolute_import

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

# Import MNIST data

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

Extracting /tmp/data/train-images-idx3-ubyte.gz

Extracting /tmp/data/train-labels-idx1-ubyte.gz

Extracting /tmp/data/t10k-images-idx3-ubyte.gz

Extracting /tmp/data/t10k-labels-idx1-ubyte.gz

# Training Parameters

learning_rate = 0.01

num_steps = 30000

batch_size = 256

display_step = 1000

examples_to_show = 10

# Network Parameters

num_hidden_1 = 256 # 1st layer num features

num_hidden_2 = 128 # 2nd layer num features (the latent dim)

num_input = 784 # MNIST data input (img shape: 28*28)

# tf Graph input (only pictures)

X = tf.placeholder("float", [None, num_input])

weights = {
    'encoder_h1': tf.Variable(tf.random_normal([num_input, num_hidden_1])),
    'encoder_h2': tf.Variable(tf.random_normal([num_hidden_1, num_hidden_2])),
    'decoder_h1': tf.Variable(tf.random_normal([num_hidden_2, num_hidden_1])),
    'decoder_h2': tf.Variable(tf.random_normal([num_hidden_1, num_input])),
}

biases = {
    'encoder_b1': tf.Variable(tf.random_normal([num_hidden_1])),
    'encoder_b2': tf.Variable(tf.random_normal([num_hidden_2])),
    'decoder_b1': tf.Variable(tf.random_normal([num_hidden_1])),
    'decoder_b2': tf.Variable(tf.random_normal([num_input])),
}
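As a quick sanity check on these shapes, the following plain-Python sketch counts the trainable parameters and the compression factor implied by the layer sizes above. The helper names (`layer_shapes`, `n_weights`, and so on) are illustrative and not part of the original example.

```python
# Layer sizes taken from the network parameters above.
num_input, num_hidden_1, num_hidden_2 = 784, 256, 128

# (inputs, outputs) for each of the four dense layers, in forward order.
layer_shapes = [
    (num_input, num_hidden_1),     # encoder_h1
    (num_hidden_1, num_hidden_2),  # encoder_h2
    (num_hidden_2, num_hidden_1),  # decoder_h1
    (num_hidden_1, num_input),     # decoder_h2
]

n_weights = sum(n_in * n_out for n_in, n_out in layer_shapes)
n_biases = sum(n_out for _, n_out in layer_shapes)
total_params = n_weights + n_biases

# How many input features are squeezed into each latent feature.
compression = num_input / num_hidden_2

print(n_weights, n_biases, total_params)  # 466944 1424 468368
print(compression)                        # 6.125
```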

# Building the encoder
def encoder(x):
    # Encoder Hidden layer with sigmoid activation #1
    layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                   biases['encoder_b1']))
    # Encoder Hidden layer with sigmoid activation #2
    layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                   biases['encoder_b2']))
    return layer_2

# Building the decoder
def decoder(x):
    # Decoder Hidden layer with sigmoid activation #1
    layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                   biases['decoder_b1']))
    # Decoder Hidden layer with sigmoid activation #2
    layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                   biases['decoder_b2']))
    return layer_2
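The encoder and decoder are each two dense layers with a sigmoid activation. The NumPy sketch below mirrors that forward pass outside of TensorFlow so the tensor shapes are easy to inspect. The `sigmoid` and `forward` helpers and the random parameters are assumptions made for illustration; nothing here is trained.

```python
import numpy as np

def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, layers):
    """Apply a stack of dense layers, each followed by a sigmoid."""
    for w, b in layers:
        x = sigmoid(x @ w + b)
    return x

rng = np.random.default_rng(0)

# Same layer sizes as the example: 784 -> 256 -> 128 -> 256 -> 784.
sizes = [784, 256, 128, 256, 784]
params = [
    (rng.standard_normal((n_in, n_out)), rng.standard_normal(n_out))
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

# A hypothetical batch of 4 flattened images with values in [0, 1).
batch = rng.random((4, 784))

latent = forward(batch, params[:2])   # encoder: first two layers
recon = forward(latent, params[2:])   # decoder: last two layers

print(latent.shape)  # (4, 128)
print(recon.shape)   # (4, 784)
```

Since the last layer is a sigmoid, every reconstructed value lies between 0 and 1, which matches the range of the MNIST pixel values.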

# Construct model

encoder_op = encoder(X)

decoder_op = decoder(encoder_op)

# Prediction

y_pred = decoder_op

# Targets (Labels) are the input data.

y_true = X

# Define loss and optimizer, minimize the squared error

loss = tf.reduce_mean(tf.pow(y_true - y_pred, 2))

optimizer = tf.train.RMSPropOptimizer(learning_rate).minimize(loss)
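The loss above is the mean of the squared differences over every pixel of every image in the batch. A small worked example in NumPy, with made-up values chosen so the arithmetic is easy to follow:

```python
import numpy as np

# Two tiny "images" of two pixels each (illustrative values only).
y_true = np.array([[0.0, 1.0],
                   [0.5, 0.5]])
y_pred = np.array([[0.0, 0.5],
                   [0.5, 1.0]])

# Squared errors are 0, 0.25, 0, 0.25; their mean over all 4 entries is 0.125.
mse = np.mean((y_true - y_pred) ** 2)

print(mse)  # 0.125
```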

# Initialize the variables (i.e. assign their default value)

init = tf.global_variables_initializer()

# Start Training

# Start a new TF session

sess = tf.Session()

# Run the initializer

sess.run(init)

# Training

for i in range(1, num_steps+1):
    # Prepare Data
    # Get the next batch of MNIST data (only images are needed, not labels)
    batch_x, _ = mnist.train.next_batch(batch_size)

    # Run optimization op (backprop) and cost op (to get loss value)
    _, l = sess.run([optimizer, loss], feed_dict={X: batch_x})
    # Display logs per step
    if i % display_step == 0 or i == 1:
        print('Step %i: Minibatch Loss: %f' % (i, l))

Step 1: Minibatch Loss: 0.438300

Step 1000: Minibatch Loss: 0.146586

Step 2000: Minibatch Loss: 0.130722

Step 3000: Minibatch Loss: 0.117178

Step 4000: Minibatch Loss: 0.109027

Step 5000: Minibatch Loss: 0.102582

Step 6000: Minibatch Loss: 0.099183

Step 7000: Minibatch Loss: 0.095619

Step 8000: Minibatch Loss: 0.089006

Step 9000: Minibatch Loss: 0.087125

Step 10000: Minibatch Loss: 0.083930

Step 11000: Minibatch Loss: 0.077512

Step 12000: Minibatch Loss: 0.077137

Step 13000: Minibatch Loss: 0.073983

Step 14000: Minibatch Loss: 0.074218

Step 15000: Minibatch Loss: 0.074492

Step 16000: Minibatch Loss: 0.074374

Step 17000: Minibatch Loss: 0.070909

Step 18000: Minibatch Loss: 0.069438

Step 19000: Minibatch Loss: 0.068245

Step 20000: Minibatch Loss: 0.068402

Step 21000: Minibatch Loss: 0.067113

Step 22000: Minibatch Loss: 0.068241

Step 23000: Minibatch Loss: 0.062454

Step 24000: Minibatch Loss: 0.059754

Step 25000: Minibatch Loss: 0.058687

Step 26000: Minibatch Loss: 0.059107

Step 27000: Minibatch Loss: 0.055788

Step 28000: Minibatch Loss: 0.057263

Step 29000: Minibatch Loss: 0.056391

Step 30000: Minibatch Loss: 0.057672

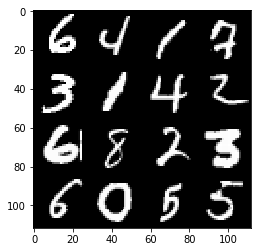

# Testing

# Encode and decode images from test set and visualize their reconstruction.

n = 4

canvas_orig = np.empty((28 * n, 28 * n))

canvas_recon = np.empty((28 * n, 28 * n))

for i in range(n):
    # MNIST test set
    batch_x, _ = mnist.test.next_batch(n)
    # Encode and decode the digit image
    g = sess.run(decoder_op, feed_dict={X: batch_x})

    # Display original images
    for j in range(n):
        # Draw the original digits
        canvas_orig[i * 28:(i + 1) * 28, j * 28:(j + 1) * 28] = batch_x[j].reshape([28, 28])
    # Display reconstructed images
    for j in range(n):
        # Draw the reconstructed digits
        canvas_recon[i * 28:(i + 1) * 28, j * 28:(j + 1) * 28] = g[j].reshape([28, 28])

print("Original Images")

plt.figure(figsize=(n, n))

plt.imshow(canvas_orig, origin="upper", cmap="gray")

plt.show()

print("Reconstructed Images")

plt.figure(figsize=(n, n))

plt.imshow(canvas_recon, origin="upper", cmap="gray")

plt.show()
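The canvas code above places each 28x28 image into its own cell of an n-by-n grid using slice assignment. The same indexing is easier to verify on small tiles, so this sketch uses hypothetical 3x3 tiles and a 2x2 grid. As a simplification, it draws all tiles from one stack rather than fetching a new batch per row.

```python
import numpy as np

n, side = 2, 3  # 2x2 grid of 3x3 tiles (the example uses n=4, side=28)

# n*n flattened tiles, each holding side*side distinct values.
tiles = np.arange(n * n * side * side, dtype=float).reshape(n * n, side * side)

canvas = np.empty((side * n, side * n))
for i in range(n):          # grid row
    for j in range(n):      # grid column
        tile = tiles[i * n + j].reshape(side, side)
        canvas[i * side:(i + 1) * side, j * side:(j + 1) * side] = tile

print(canvas.shape)  # (6, 6)
```

The top-right cell `canvas[0:3, 3:6]` holds the second tile and the bottom-left cell `canvas[3:6, 0:3]` holds the third, so tiles fill the grid row by row.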

Original Images

Reconstructed Images