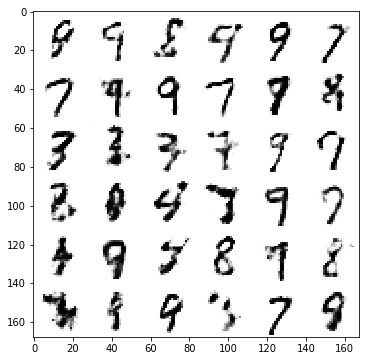

Generative Adversarial Network Example

Build a generative adversarial network (GAN) to generate digit images from a noise distribution with TensorFlow.

- Author: Aymeric Damien

- Project: https://github.com/aymericdamien/TensorFlow-Examples/

GAN Overview

References:

- Generative adversarial nets. I Goodfellow, J Pouget-Abadie, M Mirza, B Xu, D Warde-Farley, S Ozair, Y Bengio. Advances in Neural Information Processing Systems, 2672-2680.

- Understanding the difficulty of training deep feedforward neural networks. X Glorot, Y Bengio. AISTATS 9, 249-256.

Other tutorials:

- Generative Adversarial Networks Explained. Kevin Frans.

MNIST Dataset Overview

This example uses the MNIST handwritten digits. The dataset contains 60,000 training examples and 10,000 test examples. The digits have been size-normalized and centered in a fixed-size 28x28-pixel image, with pixel values scaled to the range 0 to 1. For simplicity, each image has been flattened into a 1-D NumPy array of 784 features (28*28).

More info: http://yann.lecun.com/exdb/mnist/
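The flattening described above can be sketched in NumPy. This is an illustration only: random integers stand in for real MNIST pixels (nothing is downloaded), and the division by 255 assumes the raw 8-bit pixel encoding of the original files.

```python
import numpy as np

# Stand-in for a batch of raw MNIST images: uint8 pixels in [0, 255].
# Random data is used purely for illustration.
rng = np.random.default_rng(0)
raw_images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

# Scale to [0, 1] and flatten each 28x28 image into a 784-feature vector.
flat = raw_images.astype(np.float32).reshape(-1, 28 * 28) / 255.0
print(flat.shape)  # (5, 784)

# Reshaping back recovers the 2-D layout, e.g. for display with imshow.
restored = (flat.reshape(-1, 28, 28) * 255.0).round().astype(np.uint8)
print(np.array_equal(restored, raw_images))  # True
```

The same `reshape([28, 28])` step is what the notebook uses at the end to turn generated 784-vectors back into images.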

from __future__ import division, print_function, absolute_import

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf  # written for the TensorFlow 1.x graph API (tf.placeholder, tf.Session)

# Import MNIST data
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

Extracting /tmp/data/train-images-idx3-ubyte.gz
Extracting /tmp/data/train-labels-idx1-ubyte.gz
Extracting /tmp/data/t10k-images-idx3-ubyte.gz
Extracting /tmp/data/t10k-labels-idx1-ubyte.gz

# Training Params
num_steps = 70000
batch_size = 128
learning_rate = 0.0002

# Network Params
image_dim = 784  # 28*28 pixels
gen_hidden_dim = 256
disc_hidden_dim = 256
noise_dim = 100  # Dimension of the noise vector fed to the generator
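With these settings both networks are small. A quick count of trainable parameters (the weights plus biases of each fully connected layer) shows the sizes involved; `dense_params` is a hypothetical helper written for this sketch, not part of the notebook.

```python
# Hyperparameters copied from the cell above.
image_dim = 784
gen_hidden_dim = 256
disc_hidden_dim = 256
noise_dim = 100

def dense_params(n_in, n_out):
    """Number of weights plus biases in one fully connected layer."""
    return n_in * n_out + n_out

# Generator: noise -> hidden -> image; discriminator: image -> hidden -> 1 probability.
gen_params = dense_params(noise_dim, gen_hidden_dim) + dense_params(gen_hidden_dim, image_dim)
disc_params = dense_params(image_dim, disc_hidden_dim) + dense_params(disc_hidden_dim, 1)
print(gen_params, disc_params)  # 227344 201217
```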

# A custom initialization (see Xavier Glorot init): normal with stddev = sqrt(2 / fan_in)
def glorot_init(shape):
    return tf.random_normal(shape=shape, stddev=1. / tf.sqrt(shape[0] / 2.))

# Store each layer's weights and biases
weights = {
    'gen_hidden1': tf.Variable(glorot_init([noise_dim, gen_hidden_dim])),
    'gen_out': tf.Variable(glorot_init([gen_hidden_dim, image_dim])),
    'disc_hidden1': tf.Variable(glorot_init([image_dim, disc_hidden_dim])),
    'disc_out': tf.Variable(glorot_init([disc_hidden_dim, 1])),
}
biases = {
    'gen_hidden1': tf.Variable(tf.zeros([gen_hidden_dim])),
    'gen_out': tf.Variable(tf.zeros([image_dim])),
    'disc_hidden1': tf.Variable(tf.zeros([disc_hidden_dim])),
    'disc_out': tf.Variable(tf.zeros([1])),
}
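The scaling in `glorot_init` depends only on the layer's input size: stddev = 1 / sqrt(fan_in / 2) = sqrt(2 / fan_in). Glorot and Bengio's normalized initialization instead targets a variance of 2 / (fan_in + fan_out). This NumPy sketch compares the two standard deviations for the discriminator's first layer and checks the spread of sampled weights; `custom_init_std` and `glorot_normalized_std` are hypothetical names used only here.

```python
import numpy as np

def custom_init_std(fan_in):
    # Same formula as glorot_init: 1 / sqrt(fan_in / 2) == sqrt(2 / fan_in)
    return 1.0 / np.sqrt(fan_in / 2.0)

def glorot_normalized_std(fan_in, fan_out):
    # Standard deviation that gives Var(W) = 2 / (fan_in + fan_out)
    return np.sqrt(2.0 / (fan_in + fan_out))

fan_in, fan_out = 784, 256  # discriminator's first layer (image_dim -> disc_hidden_dim)
custom_std = custom_init_std(fan_in)
glorot_std = glorot_normalized_std(fan_in, fan_out)

# Sample a weight matrix the way glorot_init does (zero-mean normal).
rng = np.random.default_rng(0)
w = rng.normal(0.0, custom_std, size=(fan_in, fan_out))

print("custom stddev:", custom_std)
print("normalized-init stddev:", glorot_std)
print("empirical stddev of sampled weights:", w.std())
```

Because the custom version ignores fan_out, it starts this layer with somewhat larger weights than the normalized rule would.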

# Generator
def generator(x):
    hidden_layer = tf.matmul(x, weights['gen_hidden1'])
    hidden_layer = tf.add(hidden_layer, biases['gen_hidden1'])
    hidden_layer = tf.nn.relu(hidden_layer)
    out_layer = tf.matmul(hidden_layer, weights['gen_out'])
    out_layer = tf.add(out_layer, biases['gen_out'])
    out_layer = tf.nn.sigmoid(out_layer)
    return out_layer

# Discriminator
def discriminator(x):
    hidden_layer = tf.matmul(x, weights['disc_hidden1'])
    hidden_layer = tf.add(hidden_layer, biases['disc_hidden1'])
    hidden_layer = tf.nn.relu(hidden_layer)
    out_layer = tf.matmul(hidden_layer, weights['disc_out'])
    out_layer = tf.add(out_layer, biases['disc_out'])
    out_layer = tf.nn.sigmoid(out_layer)
    return out_layer
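To make the tensor shapes concrete, here is a NumPy re-implementation of the same two architectures (one ReLU hidden layer, sigmoid output). It is a forward-pass sketch only: the weights are random and untrained, there is no TensorFlow dependency, and the variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
noise_dim, hidden_dim, image_dim = 100, 256, 784

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init(n_in, n_out):
    # Same scaling as glorot_init in the notebook: stddev = sqrt(2 / n_in); zero biases.
    return rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out)

Wg1, bg1 = init(noise_dim, hidden_dim)
Wg2, bg2 = init(hidden_dim, image_dim)
Wd1, bd1 = init(image_dim, hidden_dim)
Wd2, bd2 = init(hidden_dim, 1)

def generator(z):
    # noise (batch, 100) -> hidden (batch, 256) -> pixels in (0, 1), shape (batch, 784)
    return sigmoid(relu(z @ Wg1 + bg1) @ Wg2 + bg2)

def discriminator(x):
    # image (batch, 784) -> hidden (batch, 256) -> probability "real", shape (batch, 1)
    return sigmoid(relu(x @ Wd1 + bd1) @ Wd2 + bd2)

z = rng.uniform(-1.0, 1.0, size=(4, noise_dim))  # same noise prior as the training loop
fake = generator(z)
p_fake = discriminator(fake)
print(fake.shape, p_fake.shape)  # (4, 784) (4, 1)
```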

# Build Networks
# Network Inputs
gen_input = tf.placeholder(tf.float32, shape=[None, noise_dim], name='input_noise')
disc_input = tf.placeholder(tf.float32, shape=[None, image_dim], name='disc_input')

# Build Generator Network
gen_sample = generator(gen_input)

# Build 2 Discriminator Networks (one from real image input, one from generated samples).
# Both calls share the same discriminator weights and biases.
disc_real = discriminator(disc_input)
disc_fake = discriminator(gen_sample)

# Build Loss
gen_loss = -tf.reduce_mean(tf.log(disc_fake))
disc_loss = -tf.reduce_mean(tf.log(disc_real) + tf.log(1. - disc_fake))
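These two losses follow the minimax game of Goodfellow et al. The discriminator maximizes the value function V, so `disc_loss` is its negation averaged over a batch of m real images x_i (`disc_real` = D(x_i)) and noise vectors z_i (`disc_fake` = D(G(z_i))); here p_z is the uniform distribution on [-1, 1]^100 used in the training loop. For the generator, the code uses the non-saturating form suggested in the same paper: rather than minimizing log(1 - D(G(z))), which gives weak gradients early on when D confidently rejects fakes, it maximizes log D(G(z)).

```latex
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\bigl(1 - D(G(z))\bigr)\right]

\mathcal{L}_D = -\frac{1}{m}\sum_{i=1}^{m}\left[\log D(x_i) + \log\bigl(1 - D(G(z_i))\bigr)\right],
\qquad
\mathcal{L}_G = -\frac{1}{m}\sum_{i=1}^{m}\log D(G(z_i))
```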

# Build Optimizers
optimizer_gen = tf.train.AdamOptimizer(learning_rate=learning_rate)
optimizer_disc = tf.train.AdamOptimizer(learning_rate=learning_rate)

# Training Variables for each optimizer
# By default, a TensorFlow optimizer updates all trainable variables, so we
# need to specify, for each optimizer, the variables it is allowed to update.
# Generator Network Variables
gen_vars = [weights['gen_hidden1'], weights['gen_out'],
            biases['gen_hidden1'], biases['gen_out']]
# Discriminator Network Variables
disc_vars = [weights['disc_hidden1'], weights['disc_out'],
             biases['disc_hidden1'], biases['disc_out']]

# Create training operations
train_gen = optimizer_gen.minimize(gen_loss, var_list=gen_vars)
train_disc = optimizer_disc.minimize(disc_loss, var_list=disc_vars)

# Initialize the variables (i.e. assign their default value)
init = tf.global_variables_initializer()
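Listing the variables by hand works, but it is easy to miss one as the networks grow. One alternative, sketched here under the assumption that every key in the `weights` and `biases` dictionaries starts with `gen_` or `disc_` (true for the names above), is to build the lists from the key prefixes. Plain strings stand in for the `tf.Variable` objects so the sketch runs without TensorFlow; `vars_with_prefix` is a hypothetical helper.

```python
# Stand-ins for the notebook's dictionaries (strings instead of tf.Variable objects).
weights = {
    'gen_hidden1': 'W_gen_hidden1', 'gen_out': 'W_gen_out',
    'disc_hidden1': 'W_disc_hidden1', 'disc_out': 'W_disc_out',
}
biases = {
    'gen_hidden1': 'b_gen_hidden1', 'gen_out': 'b_gen_out',
    'disc_hidden1': 'b_disc_hidden1', 'disc_out': 'b_disc_out',
}

def vars_with_prefix(prefix, *param_dicts):
    """Collect every value whose key starts with prefix, across all given dicts."""
    return [v for d in param_dicts for k, v in d.items() if k.startswith(prefix)]

gen_vars = vars_with_prefix('gen_', weights, biases)
disc_vars = vars_with_prefix('disc_', weights, biases)

# Sanity checks: every variable belongs to exactly one network.
assert set(gen_vars).isdisjoint(disc_vars)
assert len(gen_vars) + len(disc_vars) == len(weights) + len(biases)
print(gen_vars)  # ['W_gen_hidden1', 'W_gen_out', 'b_gen_hidden1', 'b_gen_out']
```

With real `tf.Variable` values in the dictionaries, the resulting lists contain the same variables as the hand-written `gen_vars` and `disc_vars`.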

# Start Training
# Start a new TF session
sess = tf.Session()

# Run the initializer
sess.run(init)

# Training
for i in range(1, num_steps+1):
    # Prepare Data
    # Get the next batch of MNIST data (only images are needed, not labels)
    batch_x, _ = mnist.train.next_batch(batch_size)
    # Generate noise to feed to the generator
    z = np.random.uniform(-1., 1., size=[batch_size, noise_dim])

    # Train
    feed_dict = {disc_input: batch_x, gen_input: z}
    _, _, gl, dl = sess.run([train_gen, train_disc, gen_loss, disc_loss],
                            feed_dict=feed_dict)
    if i % 2000 == 0 or i == 1:
        print('Step %i: Generator Loss: %f, Discriminator Loss: %f' % (i, gl, dl))
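Some quick arithmetic puts the loop in perspective. The sketch assumes `mnist.train` holds 55,000 images, because `read_data_sets` holds out 5,000 of the 60,000 training images for validation by default; adjust `train_size` if your loader differs. It also lists which steps the logging condition prints.

```python
num_steps = 70000
batch_size = 128
train_size = 55000  # assumption: 60,000 MNIST training images minus 5,000 held out for validation

# Total real images shown to the discriminator, and the equivalent number of epochs.
images_seen = num_steps * batch_size
epochs = images_seen / train_size
print(images_seen, round(epochs, 1))  # 8960000 162.9

# Steps that satisfy the notebook's logging condition.
logged_steps = [i for i in range(1, num_steps + 1) if i % 2000 == 0 or i == 1]
print(len(logged_steps), logged_steps[:3], logged_steps[-1])  # 36 [1, 2000, 4000] 70000
```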

Step 1: Generator Loss: 0.774581, Discriminator Loss: 1.300602
Step 2000: Generator Loss: 4.521158, Discriminator Loss: 0.030166
Step 4000: Generator Loss: 3.685439, Discriminator Loss: 0.125958
Step 6000: Generator Loss: 4.412449, Discriminator Loss: 0.097088
Step 8000: Generator Loss: 3.996747, Discriminator Loss: 0.150800
Step 10000: Generator Loss: 3.850827, Discriminator Loss: 0.225699
Step 12000: Generator Loss: 2.950704, Discriminator Loss: 0.279967
Step 14000: Generator Loss: 3.741951, Discriminator Loss: 0.241062
Step 16000: Generator Loss: 3.117743, Discriminator Loss: 0.432293
Step 18000: Generator Loss: 3.647199, Discriminator Loss: 0.278121
Step 20000: Generator Loss: 3.186711, Discriminator Loss: 0.313830
Step 22000: Generator Loss: 3.737114, Discriminator Loss: 0.201730
Step 24000: Generator Loss: 3.042442, Discriminator Loss: 0.454414
Step 26000: Generator Loss: 3.340376, Discriminator Loss: 0.249428
Step 28000: Generator Loss: 3.423218, Discriminator Loss: 0.369653
Step 30000: Generator Loss: 3.219242, Discriminator Loss: 0.463535
Step 32000: Generator Loss: 3.313017, Discriminator Loss: 0.276070
Step 34000: Generator Loss: 3.413397, Discriminator Loss: 0.367721
Step 36000: Generator Loss: 3.240625, Discriminator Loss: 0.446160
Step 38000: Generator Loss: 3.175355, Discriminator Loss: 0.377628
Step 40000: Generator Loss: 3.154558, Discriminator Loss: 0.478812
Step 42000: Generator Loss: 3.210753, Discriminator Loss: 0.497502
Step 44000: Generator Loss: 2.883431, Discriminator Loss: 0.395812
Step 46000: Generator Loss: 2.584176, Discriminator Loss: 0.420783
Step 48000: Generator Loss: 2.581381, Discriminator Loss: 0.469289
Step 50000: Generator Loss: 2.752729, Discriminator Loss: 0.373544
Step 52000: Generator Loss: 2.649749, Discriminator Loss: 0.463755
Step 54000: Generator Loss: 2.468188, Discriminator Loss: 0.556129
Step 56000: Generator Loss: 2.653330, Discriminator Loss: 0.377572
Step 58000: Generator Loss: 2.697943, Discriminator Loss: 0.424133
Step 60000: Generator Loss: 2.835973, Discriminator Loss: 0.413252
Step 62000: Generator Loss: 2.751346, Discriminator Loss: 0.403332
Step 64000: Generator Loss: 3.212001, Discriminator Loss: 0.534427
Step 66000: Generator Loss: 2.878227, Discriminator Loss: 0.431244
Step 68000: Generator Loss: 3.104266, Discriminator Loss: 0.426825
Step 70000: Generator Loss: 2.871485, Discriminator Loss: 0.348638
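Two reference points help when reading these numbers. If the discriminator outputs 0.5 for every input (it cannot tell real from fake), the per-example losses are ln 2 for the generator and 2 ln 2 for the discriminator; the Step 1 values are close to these. If it is confident and correct, say D = 0.99 on real images and 0.01 on fakes, the generator loss rises to about 4.6 while the discriminator loss drops to about 0.02, a pattern similar to the Step 2000 line. The sketch evaluates the notebook's loss formulas for a single example; the probability values are chosen for illustration.

```python
import math

def gan_losses(d_real, d_fake):
    """Single-example versions of the notebook's gen_loss and disc_loss."""
    gen_loss = -math.log(d_fake)
    disc_loss = -(math.log(d_real) + math.log(1.0 - d_fake))
    return gen_loss, disc_loss

# Discriminator cannot distinguish real from fake.
g_eq, d_eq = gan_losses(0.5, 0.5)
print(round(g_eq, 4), round(d_eq, 4))  # 0.6931 1.3863

# Discriminator is confident and correct.
g_conf, d_conf = gan_losses(0.99, 0.01)
print(round(g_conf, 2), round(d_conf, 2))  # 4.61 0.02
```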

# Testing
# Generate images from noise, using the generator network.
n = 6
canvas = np.empty((28 * n, 28 * n))
for i in range(n):
    # Noise input.
    z = np.random.uniform(-1., 1., size=[n, noise_dim])
    # Generate image from noise.
    g = sess.run(gen_sample, feed_dict={gen_input: z})
    # Reverse colours for better display
    g = -1 * (g - 1)
    for j in range(n):
        # Draw the generated digits
        canvas[i * 28:(i + 1) * 28, j * 28:(j + 1) * 28] = g[j].reshape([28, 28])

plt.figure(figsize=(n, n))
plt.imshow(canvas, origin="upper", cmap="gray")
plt.show()
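The nested loop in the testing cell tiles an n-by-n grid of generated images into one canvas. The same layout can be produced with a single reshape and transpose; this NumPy sketch checks that both approaches agree, using random values as a stand-in for generator output (no trained model is involved). The colour inversion `-1 * (g - 1)` is simply `1 - g`.

```python
import numpy as np

n = 6
rng = np.random.default_rng(0)
# Stand-in for n*n generated images, each a flat 784-vector in [0, 1].
# Row i*n + j holds the image for grid cell (i, j).
images = rng.random((n * n, 28 * 28))
images = 1.0 - images  # same colour inversion as -1 * (g - 1)

# Loop version, mirroring the notebook.
canvas_loop = np.empty((28 * n, 28 * n))
for i in range(n):
    for j in range(n):
        canvas_loop[i * 28:(i + 1) * 28, j * 28:(j + 1) * 28] = images[i * n + j].reshape(28, 28)

# Vectorized version:
# (cell_row, cell_col, pixel_row, pixel_col) -> (cell_row, pixel_row, cell_col, pixel_col)
canvas_vec = (images.reshape(n, n, 28, 28)
              .transpose(0, 2, 1, 3)
              .reshape(28 * n, 28 * n))

print(np.array_equal(canvas_loop, canvas_vec))  # True
```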