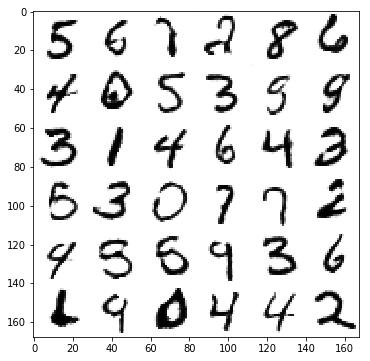

Deep Convolutional Generative Adversarial Network Example

Build a deep convolutional generative adversarial network (DCGAN) to generate digit images from a noise distribution with TensorFlow.

- Author: Aymeric Damien

- Project: https://github.com/aymericdamien/TensorFlow-Examples/

DCGAN Overview

References:

- Unsupervised representation learning with deep convolutional generative adversarial networks. A Radford, L Metz, S Chintala, 2016.

- Understanding the difficulty of training deep feedforward neural networks. X Glorot, Y Bengio. Aistats 9, 249-256

- Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. Sergey Ioffe, Christian Szegedy. 2015.

MNIST Dataset Overview

This example is using MNIST handwritten digits. The dataset contains 60,000 examples for training and 10,000 examples for testing. The digits have been size-normalized and centered in a fixed-size image (28x28 pixels) with values from 0 to 1. For simplicity, each image has been flattened and converted to a 1-D numpy array of 784 features (28*28).

More info: http://yann.lecun.com/exdb/mnist/

from __future__ import division, print_function, absolute_import

import matplotlib.pyplot as plt

import numpy as np

import tensorflow as tf

# Import MNIST data

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

Extracting /tmp/data/train-images-idx3-ubyte.gz

Extracting /tmp/data/train-labels-idx1-ubyte.gz

Extracting /tmp/data/t10k-images-idx3-ubyte.gz

Extracting /tmp/data/t10k-labels-idx1-ubyte.gz

# Training Params

num_steps = 10000

batch_size = 128

lr_generator = 0.002

lr_discriminator = 0.002

# Network Params

image_dim = 784 # 28*28 pixels * 1 channel

noise_dim = 100 # Noise data points

# Build Networks

# Network Inputs

noise_input = tf.placeholder(tf.float32, shape=[None, noise_dim])

real_image_input = tf.placeholder(tf.float32, shape=[None, 28, 28, 1])

# A boolean to indicate batch normalization if it is training or inference time

is_training = tf.placeholder(tf.bool)

#LeakyReLU activation

def leakyrelu(x, alpha=0.2):

return 0.5 * (1 + alpha) * x + 0.5 * (1 - alpha) * abs(x)

# Generator Network

# Input: Noise, Output: Image

# Note that batch normalization has different behavior at training and inference time,

# we then use a placeholder to indicates the layer if we are training or not.

def generator(x, reuse=False):

with tf.variable_scope('Generator', reuse=reuse):

# TensorFlow Layers automatically create variables and calculate their

# shape, based on the input.

x = tf.layers.dense(x, units=7 * 7 * 128)

x = tf.layers.batch_normalization(x, training=is_training)

x = tf.nn.relu(x)

# Reshape to a 4-D array of images: (batch, height, width, channels)

# New shape: (batch, 7, 7, 128)

x = tf.reshape(x, shape=[-1, 7, 7, 128])

# Deconvolution, image shape: (batch, 14, 14, 64)

x = tf.layers.conv2d_transpose(x, 64, 5, strides=2, padding='same')

x = tf.layers.batch_normalization(x, training=is_training)

x = tf.nn.relu(x)

# Deconvolution, image shape: (batch, 28, 28, 1)

x = tf.layers.conv2d_transpose(x, 1, 5, strides=2, padding='same')

# Apply tanh for better stability - clip values to [-1, 1].

x = tf.nn.tanh(x)

return x

# Discriminator Network

# Input: Image, Output: Prediction Real/Fake Image

def discriminator(x, reuse=False):

with tf.variable_scope('Discriminator', reuse=reuse):

# Typical convolutional neural network to classify images.

x = tf.layers.conv2d(x, 64, 5, strides=2, padding='same')

x = tf.layers.batch_normalization(x, training=is_training)

x = leakyrelu(x)

x = tf.layers.conv2d(x, 128, 5, strides=2, padding='same')

x = tf.layers.batch_normalization(x, training=is_training)

x = leakyrelu(x)

# Flatten

x = tf.reshape(x, shape=[-1, 7*7*128])

x = tf.layers.dense(x, 1024)

x = tf.layers.batch_normalization(x, training=is_training)

x = leakyrelu(x)

# Output 2 classes: Real and Fake images

x = tf.layers.dense(x, 2)

return x

# Build Generator Network

gen_sample = generator(noise_input)

# Build 2 Discriminator Networks (one from noise input, one from generated samples)

disc_real = discriminator(real_image_input)

disc_fake = discriminator(gen_sample, reuse=True)

# Build the stacked generator/discriminator

stacked_gan = discriminator(gen_sample, reuse=True)

# Build Loss (Labels for real images: 1, for fake images: 0)

# Discriminator Loss for real and fake samples

disc_loss_real = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(

logits=disc_real, labels=tf.ones([batch_size], dtype=tf.int32)))

disc_loss_fake = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(

logits=disc_fake, labels=tf.zeros([batch_size], dtype=tf.int32)))

# Sum both loss

disc_loss = disc_loss_real + disc_loss_fake

# Generator Loss (The generator tries to fool the discriminator, thus labels are 1)

gen_loss = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(

logits=stacked_gan, labels=tf.ones([batch_size], dtype=tf.int32)))

# Build Optimizers

optimizer_gen = tf.train.AdamOptimizer(learning_rate=lr_generator, beta1=0.5, beta2=0.999)

optimizer_disc = tf.train.AdamOptimizer(learning_rate=lr_discriminator, beta1=0.5, beta2=0.999)

# Training Variables for each optimizer

# By default in TensorFlow, all variables are updated by each optimizer, so we

# need to precise for each one of them the specific variables to update.

# Generator Network Variables

gen_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='Generator')

# Discriminator Network Variables

disc_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='Discriminator')

# Create training operations

# TensorFlow UPDATE_OPS collection holds all batch norm operation to update the moving mean/stddev

gen_update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS, scope='Generator')

# `control_dependencies` ensure that the `gen_update_ops` will be run before the `minimize` op (backprop)

with tf.control_dependencies(gen_update_ops):

train_gen = optimizer_gen.minimize(gen_loss, var_list=gen_vars)

disc_update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS, scope='Discriminator')

with tf.control_dependencies(disc_update_ops):

train_disc = optimizer_disc.minimize(disc_loss, var_list=disc_vars)

# Initialize the variables (i.e. assign their default value)

init = tf.global_variables_initializer()

# Start Training

# Start a new TF session

sess = tf.Session()

# Run the initializer

sess.run(init)

# Training

for i in range(1, num_steps+1):

# Prepare Input Data

# Get the next batch of MNIST data (only images are needed, not labels)

batch_x, _ = mnist.train.next_batch(batch_size)

batch_x = np.reshape(batch_x, newshape=[-1, 28, 28, 1])

# Rescale to [-1, 1], the input range of the discriminator

batch_x = batch_x * 2. - 1.

# Discriminator Training

# Generate noise to feed to the generator

z = np.random.uniform(-1., 1., size=[batch_size, noise_dim])

_, dl = sess.run([train_disc, disc_loss], feed_dict={real_image_input: batch_x, noise_input: z, is_training:True})

# Generator Training

# Generate noise to feed to the generator

z = np.random.uniform(-1., 1., size=[batch_size, noise_dim])

_, gl = sess.run([train_gen, gen_loss], feed_dict={noise_input: z, is_training:True})

if i % 500 == 0 or i == 1:

print('Step %i: Generator Loss: %f, Discriminator Loss: %f' % (i, gl, dl))

Step 1: Generator Loss: 3.590350, Discriminator Loss: 1.907586

Step 500: Generator Loss: 1.254698, Discriminator Loss: 1.005236

Step 1000: Generator Loss: 1.730409, Discriminator Loss: 0.837684

Step 1500: Generator Loss: 1.962198, Discriminator Loss: 0.618827

Step 2000: Generator Loss: 2.767945, Discriminator Loss: 0.378071

Step 2500: Generator Loss: 2.370605, Discriminator Loss: 0.561247

Step 3000: Generator Loss: 3.427798, Discriminator Loss: 0.402951

Step 3500: Generator Loss: 4.904454, Discriminator Loss: 0.554856

Step 4000: Generator Loss: 4.045284, Discriminator Loss: 0.454970

Step 4500: Generator Loss: 4.577699, Discriminator Loss: 0.687195

Step 5000: Generator Loss: 3.476081, Discriminator Loss: 0.210492

Step 5500: Generator Loss: 3.898139, Discriminator Loss: 0.143352

Step 6000: Generator Loss: 4.089877, Discriminator Loss: 1.082561

Step 6500: Generator Loss: 5.911457, Discriminator Loss: 0.154059

Step 7000: Generator Loss: 3.594872, Discriminator Loss: 0.152970

Step 7500: Generator Loss: 6.067883, Discriminator Loss: 0.084864

Step 8000: Generator Loss: 6.737456, Discriminator Loss: 0.402566

Step 8500: Generator Loss: 6.630128, Discriminator Loss: 0.034838

Step 9000: Generator Loss: 6.480587, Discriminator Loss: 0.427419

Step 9500: Generator Loss: 7.200409, Discriminator Loss: 0.124268

Step 10000: Generator Loss: 5.479313, Discriminator Loss: 0.191389

# Testing

# Generate images from noise, using the generator network.

n = 6

canvas = np.empty((28 * n, 28 * n))

for i in range(n):

# Noise input.

z = np.random.uniform(-1., 1., size=[n, noise_dim])

# Generate image from noise.

g = sess.run(gen_sample, feed_dict={noise_input: z, is_training:False})

# Rescale values to the original [0, 1] (from tanh -> [-1, 1])

g = (g + 1.) / 2.

# Reverse colours for better display

g = -1 * (g - 1)

for j in range(n):

# Draw the generated digits

canvas[i * 28:(i + 1) * 28, j * 28:(j + 1) * 28] = g[j].reshape([28, 28])

plt.figure(figsize=(n, n))

plt.imshow(canvas, origin="upper", cmap="gray")

plt.show()