使用 TensorFlow 的简单的 GAN

您可以按照 Jupyter 笔记本中的代码ch-14a_SimpleGAN。

为了使用 TensorFlow 构建 GAN,我们使用以下步骤构建三个网络,两个判别器模型和一个生成器模型:

- 首先添加用于定义网络的超参数:

# graph hyperparameters

g_learning_rate = 0.00001

d_learning_rate = 0.01

n_x = 784 # number of pixels in the MNIST image

# number of hidden layers for generator and discriminator

g_n_layers = 3

d_n_layers = 1

# neurons in each hidden layer

g_n_neurons = [256, 512, 1024]

d_n_neurons = [256]

# define parameter ditionary

d_params = {}

g_params = {}

activation = tf.nn.leaky_relu

w_initializer = tf.glorot_uniform_initializer

b_initializer = tf.zeros_initializer

- 接下来,定义生成器网络:

z_p = tf.placeholder(dtype=tf.float32, name='z_p',

shape=[None, n_z])

layer = z_p

# add generator network weights, biases and layers

with tf.variable_scope('g'):

for i in range(0, g_n_layers): w_name = 'w_{0:04d}'.format(i)

g_params[w_name] = tf.get_variable(

name=w_name,

shape=[n_z if i == 0 else g_n_neurons[i - 1],

g_n_neurons[i]],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

g_params[b_name] = tf.get_variable(

name=b_name, shape=[g_n_neurons[i]],

initializer=b_initializer())

layer = activation(

tf.matmul(layer, g_params[w_name]) + g_params[b_name])

# output (logit) layer

i = g_n_layers

w_name = 'w_{0:04d}'.format(i)

g_params[w_name] = tf.get_variable(

name=w_name,

shape=[g_n_neurons[i - 1], n_x],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

g_params[b_name] = tf.get_variable(

name=b_name, shape=[n_x], initializer=b_initializer())

g_logit = tf.matmul(layer, g_params[w_name]) + g_params[b_name]

g_model = tf.nn.tanh(g_logit)

- 接下来,定义我们将构建的两个判别器网络的权重和偏差:

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

d_params[w_name] = tf.get_variable(

name=w_name,

shape=[n_x if i == 0 else d_n_neurons[i - 1],

d_n_neurons[i]],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

d_params[b_name] = tf.get_variable(

name=b_name, shape=[d_n_neurons[i]],

initializer=b_initializer())

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

d_params[w_name] = tf.get_variable(

name=w_name, shape=[d_n_neurons[i - 1], 1],

initializer=w_initializer())

b_name = 'b_{0:04d}'.format(i)

d_params[b_name] = tf.get_variable(

name=b_name, shape=[1], initializer=b_initializer())

- 现在使用这些参数,构建将真实图像作为输入并输出分类的判别器:

# define discriminator_real

# input real images

x_p = tf.placeholder(dtype=tf.float32, name='x_p',

shape=[None, n_x])

layer = x_p

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

layer = activation(

tf.matmul(layer, d_params[w_name]) + d_params[b_name])

layer = tf.nn.dropout(layer,0.7)

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

d_logit_real = tf.matmul(layer,

d_params[w_name]) + d_params[b_name]

d_model_real = tf.nn.sigmoid(d_logit_real)

- 接下来,使用相同的参数构建另一个判别器网络,但提供生成器的输出作为输入:

# define discriminator_fake

# input generated fake images

z = g_model

layer = z

with tf.variable_scope('d'):

for i in range(0, d_n_layers): w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

layer = activation(

tf.matmul(layer, d_params[w_name]) + d_params[b_name])

layer = tf.nn.dropout(layer,0.7)

#output (logit) layer

i = d_n_layers

w_name = 'w_{0:04d}'.format(i)

b_name = 'b_{0:04d}'.format(i)

d_logit_fake = tf.matmul(layer,

d_params[w_name]) + d_params[b_name]

d_model_fake = tf.nn.sigmoid(d_logit_fake)

- 现在我们已经建立了三个网络,它们之间的连接是使用损失,优化器和训练函数完成的。在训练生成器时,我们只训练生成器的参数,在训练判别器时,我们只训练判别器的参数。我们使用

var_list参数将此指定给优化器的minimize()函数。以下是为两种网络定义损失,优化器和训练函数的完整代码:

g_loss = -tf.reduce_mean(tf.log(d_model_fake))

d_loss = -tf.reduce_mean(tf.log(d_model_real) + tf.log(1 - d_model_fake))

g_optimizer = tf.train.AdamOptimizer(g_learning_rate)

d_optimizer = tf.train.GradientDescentOptimizer(d_learning_rate)

g_train_op = g_optimizer.minimize(g_loss,

var_list=list(g_params.values()))

d_train_op = d_optimizer.minimize(d_loss,

var_list=list(d_params.values()))

- 现在我们已经定义了模型,我们必须训练模型。训练按照以下算法完成:

For each epoch:

For each batch: get real images x_batch

generate noise z_batch

train discriminator using z_batch and x_batch

generate noise z_batch

train generator using z_batch

笔记本电脑的完整训练代码如下:

n_epochs = 400

batch_size = 100

n_batches = int(mnist.train.num_examples / batch_size)

n_epochs_print = 50

with tf.Session() as tfs:

tfs.run(tf.global_variables_initializer())

for epoch in range(n_epochs):

epoch_d_loss = 0.0

epoch_g_loss = 0.0

for batch in range(n_batches):

x_batch, _ = mnist.train.next_batch(batch_size)

x_batch = norm(x_batch)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

feed_dict = {x_p: x_batch,z_p: z_batch}

_,batch_d_loss = tfs.run([d_train_op,d_loss],

feed_dict=feed_dict)

z_batch = np.random.uniform(-1.0,1.0,size=[batch_size,n_z])

feed_dict={z_p: z_batch}

_,batch_g_loss = tfs.run([g_train_op,g_loss],

feed_dict=feed_dict)

epoch_d_loss += batch_d_loss

epoch_g_loss += batch_g_loss

if epoch%n_epochs_print == 0:

average_d_loss = epoch_d_loss / n_batches

average_g_loss = epoch_g_loss / n_batches

print('epoch: {0:04d} d_loss = {1:0.6f} g_loss = {2:0.6f}'

.format(epoch,average_d_loss,average_g_loss))

# predict images using generator model trained

x_pred = tfs.run(g_model,feed_dict={z_p:z_test})

display_images(x_pred.reshape(-1,pixel_size,pixel_size))

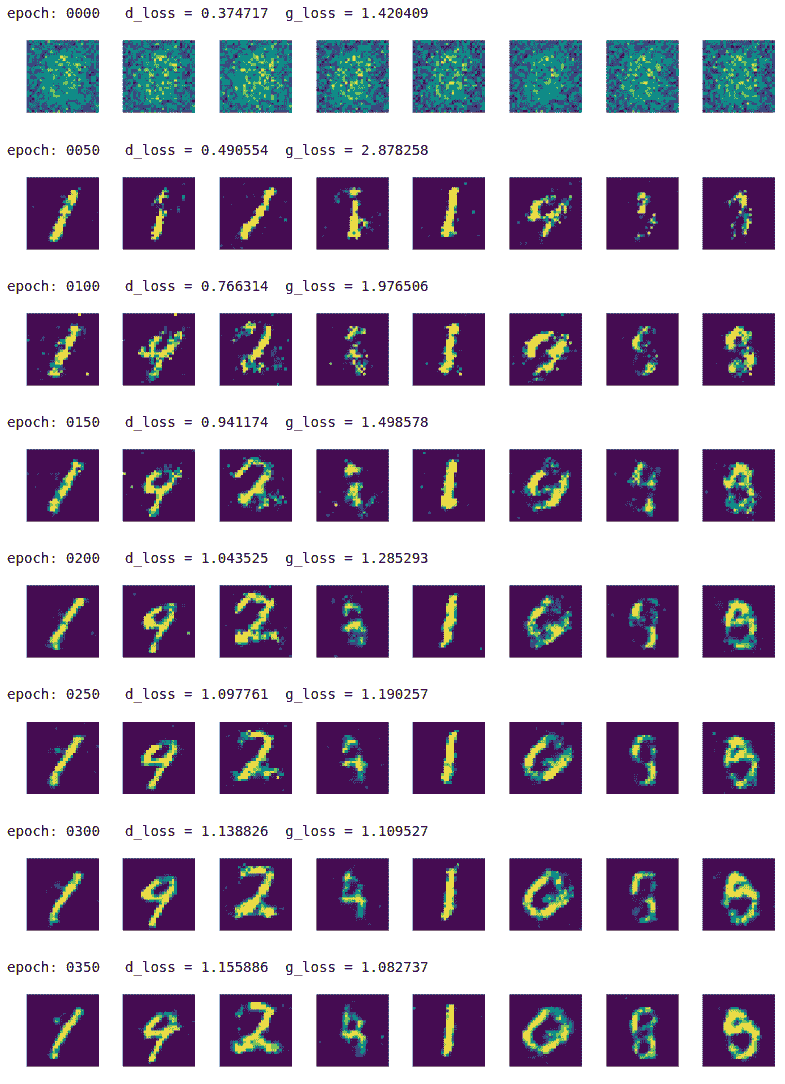

我们每 50 个周期印刷生成的图像:

正如我们所看到的那样,生成器在周期 0 中只产生噪声,但是在周期 350 中,它经过训练可以产生更好的手写数字形状。您可以尝试使用周期,正则化,网络架构和其他超参数进行试验,看看是否可以产生更快更好的结果。